Breaking Down visionOS

Building Blocks of visionOS and spatial computing

Creating a visionOS application

Creating volumes and 3D objects with Reality Composer Pro

Adding Videos and Audio with RealityKit

Analyze your surroundings with ARKit

Tips for designing visionOS UI

Tips for designing with vision and motion

Tips for designing for SharePlay

Creating and manipulating Space

Launching UIKit and SwiftUI apps in visionOS

Enhance iPhone/iPad apps for the Shared Space

Testing RealityKit with RealityKit Trace

Using ARKit to track and perceive the visual world

How to use Reality Composer Pro

Utilizing Safari Development Features

Using Quick Look In Spatial Computing

What is visionOS?

visionOS is the operating system for Apple Vision Pro, and Apple has released a visionOS SDK that gives developers the tools to build applications for the device. Vision Pro's display technology creates an environment where users can view and interact with applications in 3D space. Apple describes this new kind of development in 3D space as spatial computing. As I go through the documentation, I will post my findings and thoughts below!

Building Blocks of visionOS

Apple describes the following as the core components that are the building blocks of spatial computing.

Windows

Windows are SwiftUI scenes that can display 2D and 3D content. The content in a window is comparable to the views displayed in current iOS applications.

Volumes

Volumes are SwiftUI scenes that showcase 3D content created with RealityKit or Unity. Because volumes have depth in all three dimensions, their content can be viewed from any angle.

Space

visionOS gives developers the ability to manipulate space in order to give users dynamic experiences. Space can be “shared”, in which case windows and volumes from different applications appear next to each other. Alternatively, space can be “fully immersive”, in which case the user is completely engulfed in the experience of a single application.

Principles of Spatial Design

Below are some rules and guidelines to keep in mind when developing spatial applications.

Keeping Apps Familiar

Use familiar components and design patterns from SwiftUI and Apple's Human Interface Guidelines to provide a relatable experience for users. These components will be displayed in windows.

Keep the number of windows small, around one or two per application, and configure each window's size to suit the app's content.

Window and UI component sizes are specified in points.
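To illustrate point-based sizing, here is a minimal sketch that sets a window's default size in points. The app name `NotesApp` and the view `NotesView` are hypothetical placeholders, and 800 x 600 is an arbitrary example size rather than an Apple recommendation.

```swift
import SwiftUI

@main
struct NotesApp: App {
    var body: some Scene {
        WindowGroup {
            NotesView()
        }
        // The default window size is given in points.
        .defaultSize(width: 800, height: 600)
    }
}

struct NotesView: View {
    var body: some View {
        Text("Hello, visionOS")
            .font(.largeTitle)
            .padding()
    }
}
```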

Keep Your App Human-Centered

When designing spatial apps, think about what a user can see and where they can move

Place main content in the center of a user's view

Design with ergonomics in mind

Place objects at comfortable sizes and distances

Place content away from arm's reach

Avoid placing content extremely high or low

Avoid anchoring content to people's view; this blocks their line of sight

Create experiences that require minimal movement

Rely on the Digital Crown's default behavior to recenter the window scene

How to create a visionOS application?

How to access the visionOS SDK?

To access the visionOS SDK in Xcode, I needed the following upgrades:

macOS Ventura 13.5

Xcode 15.0 beta 2

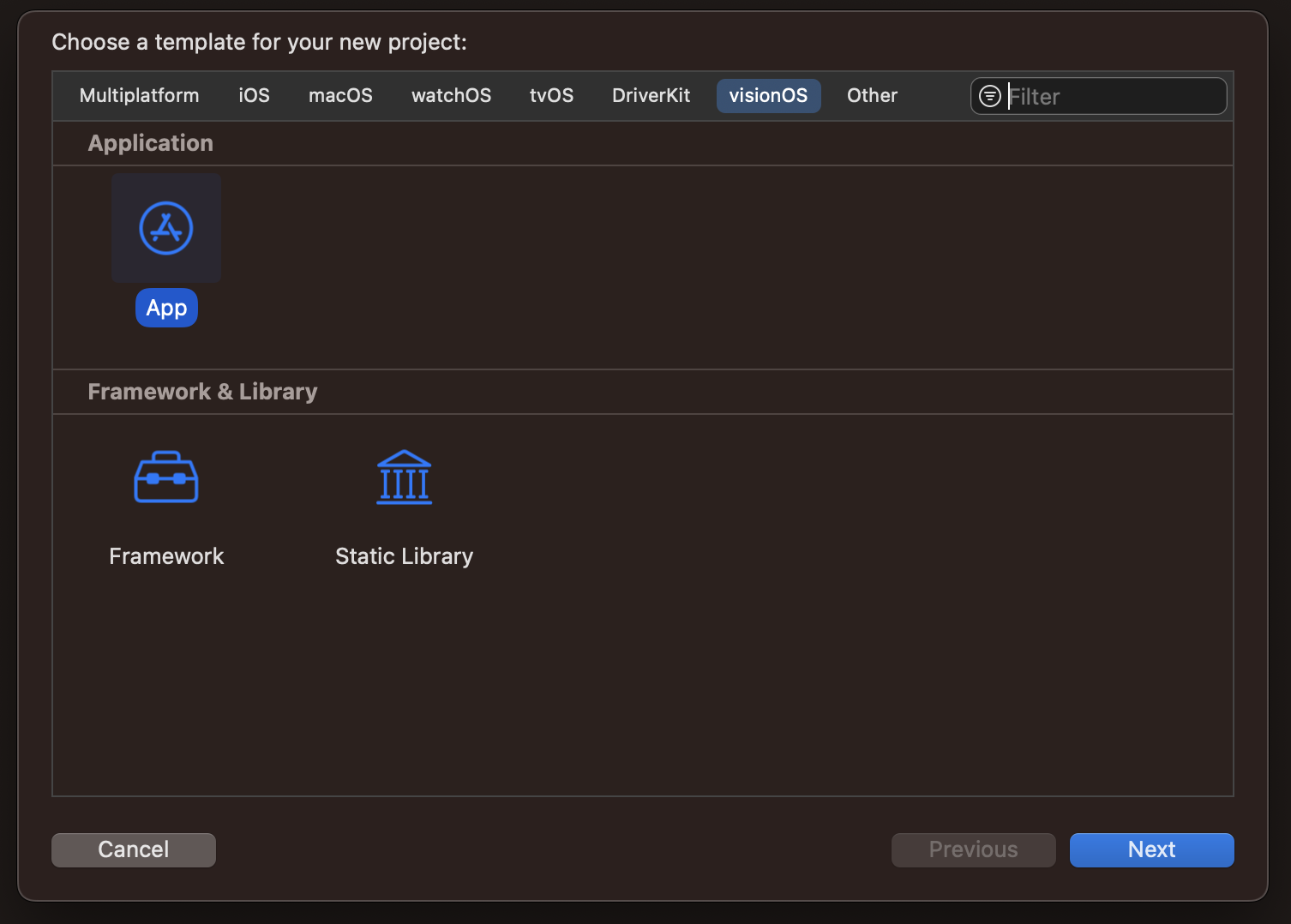

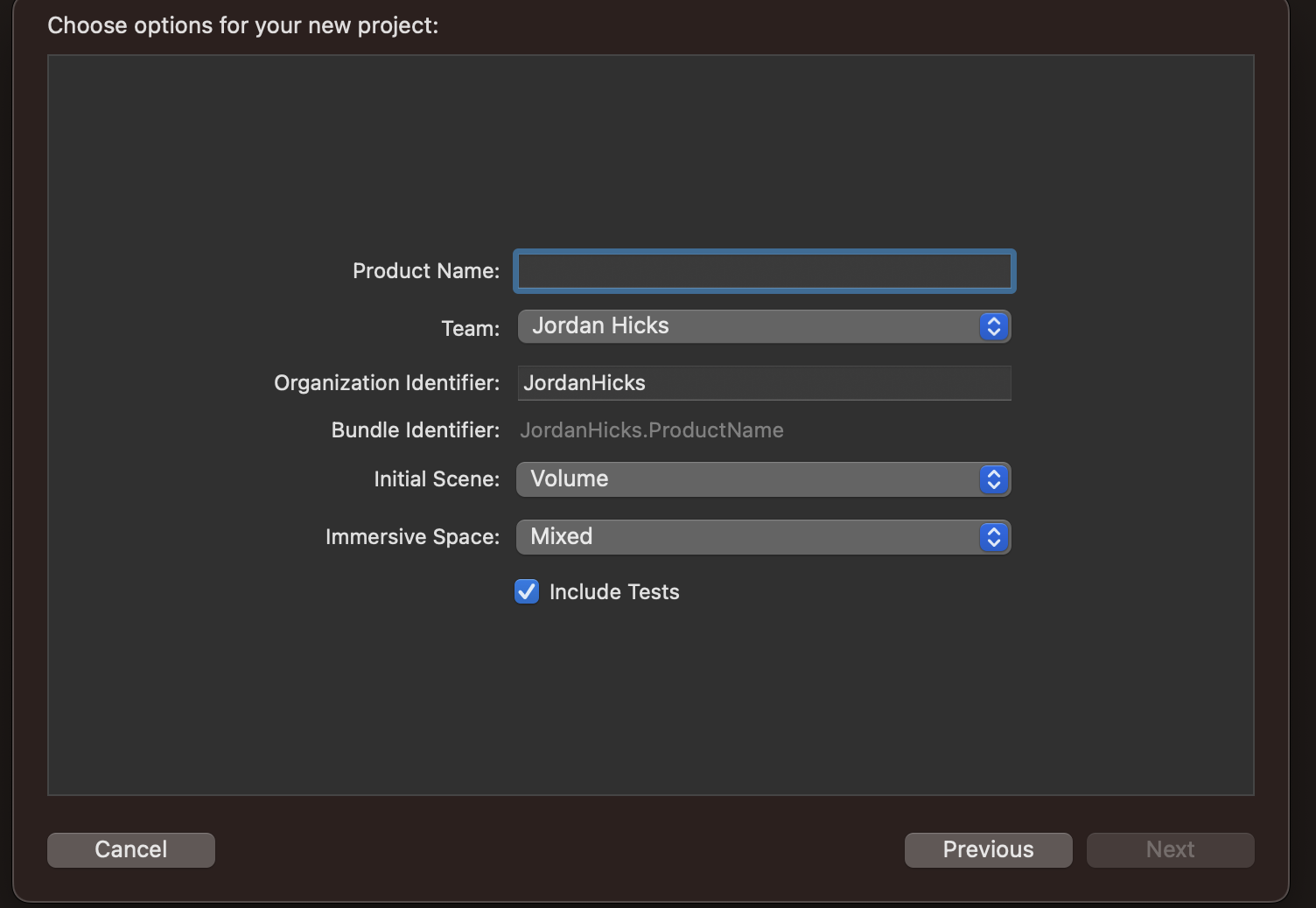

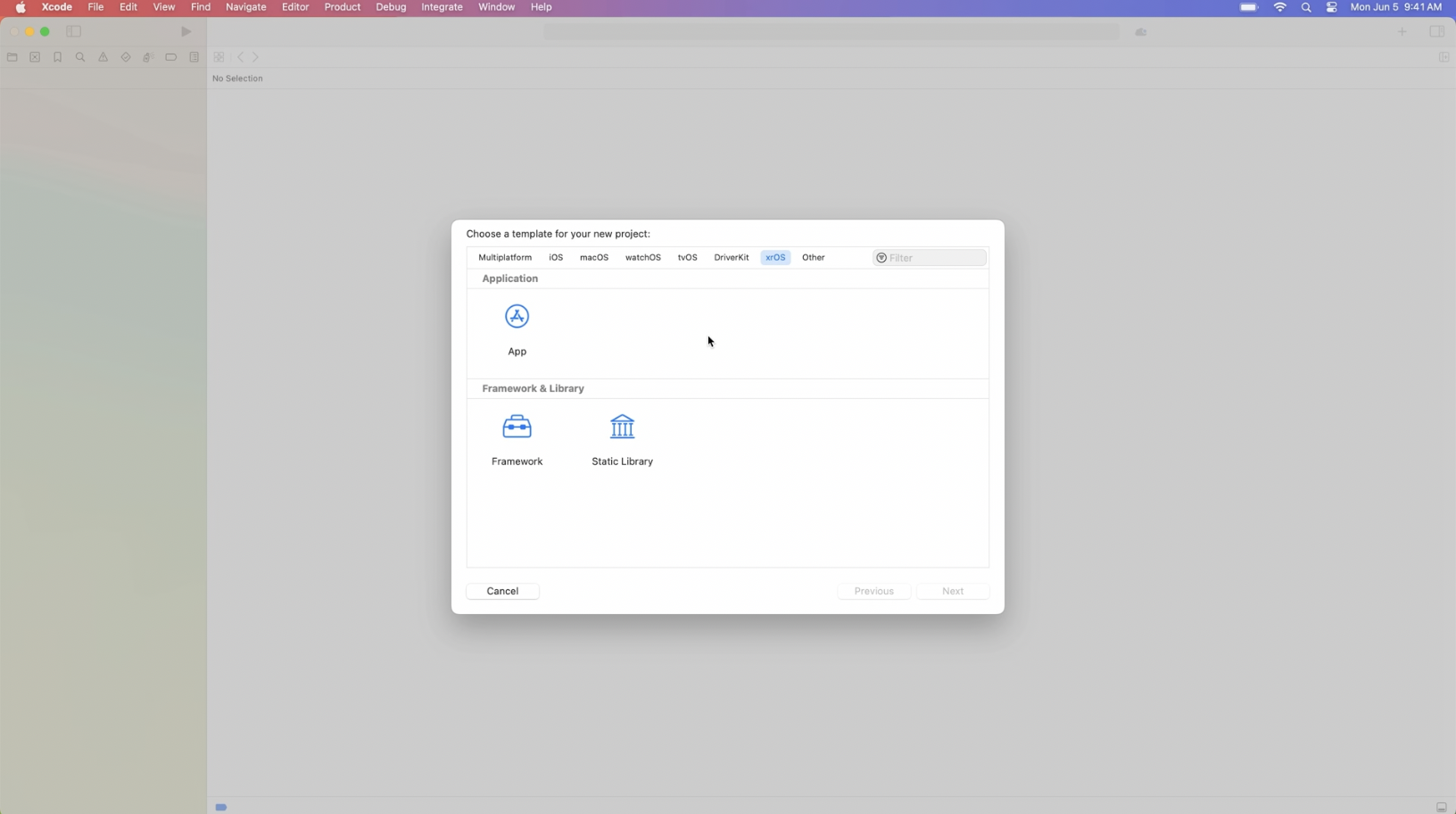

Once the necessary Xcode version is installed, you can create a visionOS app by selecting the visionOS tab when creating a new project. After selecting visionOS, you can designate the initial scene as either a window or a volume. You can also choose the immersive space, if any, in which that content will appear.

How to create and modify 3D objects in visionOS?

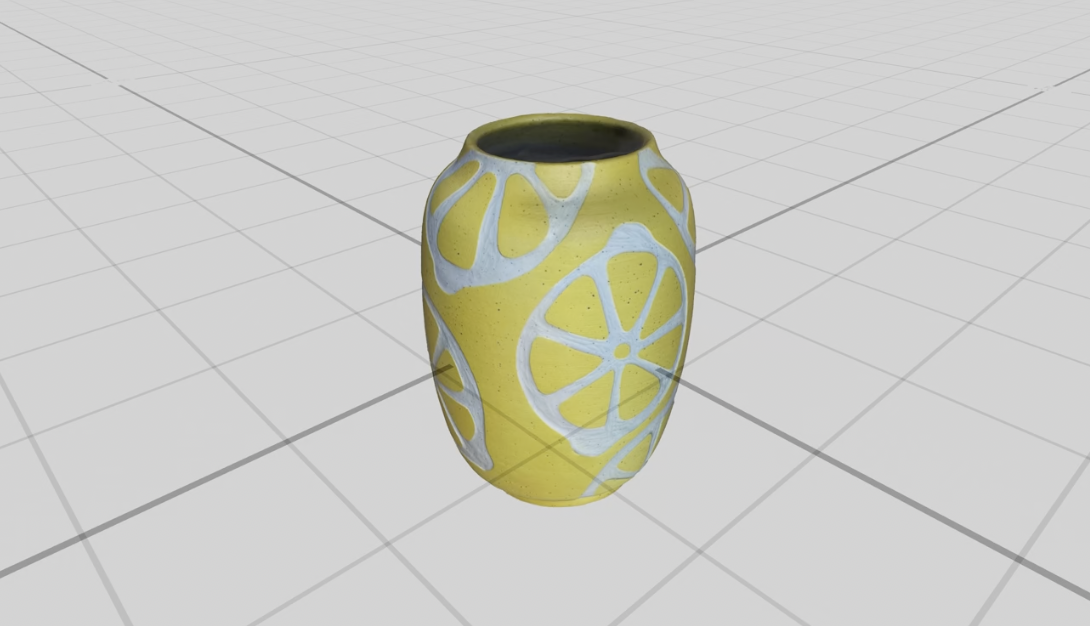

Volumes are containers that hold 3D UI objects that can be displayed in open space in visionOS applications.

In order to create volumes and modify 3D objects, download and install Reality Composer Pro on your machine.

https://developer.apple.com/augmented-reality/tools/

Create a new visionOS app in Xcode, designating the initial scene as a volume.

When displaying 3D content, you must make your app's WindowGroup volumetric by applying the .windowStyle(.volumetric) modifier.
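Here is a minimal sketch of a volumetric window, assuming a hypothetical `GlobeApp` with a generated sphere as placeholder content. Unlike a regular window, a volume's default size can be given in three dimensions, here in meters.

```swift
import SwiftUI
import RealityKit

@main
struct GlobeApp: App {
    var body: some Scene {
        WindowGroup {
            GlobeView()
        }
        // Turns the window into a volume that can be viewed from any angle.
        .windowStyle(.volumetric)
        // Volumes are sized in three dimensions; this example uses meters.
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)
    }
}

struct GlobeView: View {
    var body: some View {
        RealityView { content in
            // Placeholder content: a blue sphere with a 10 cm radius.
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            content.add(sphere)
        }
    }
}
```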

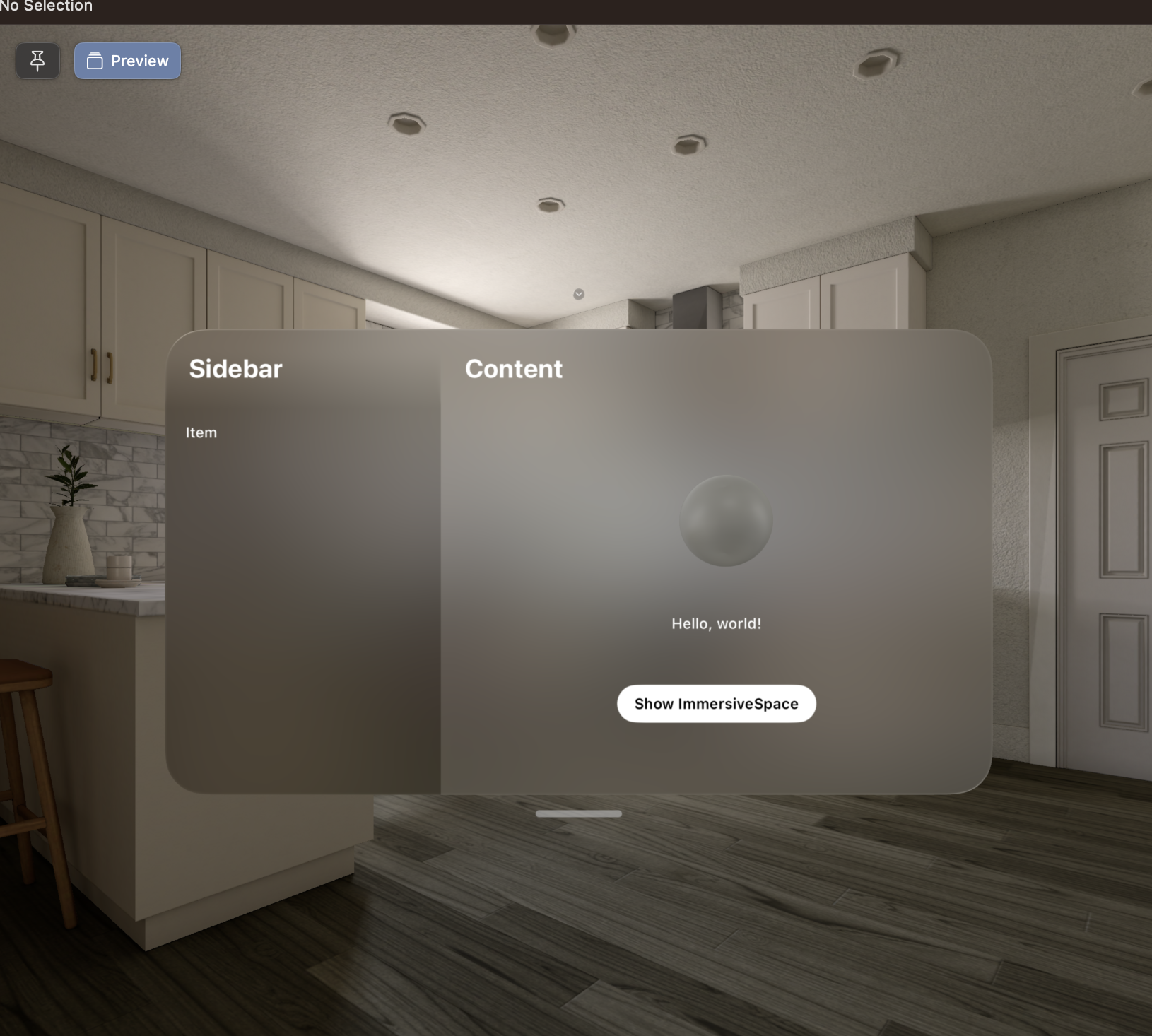

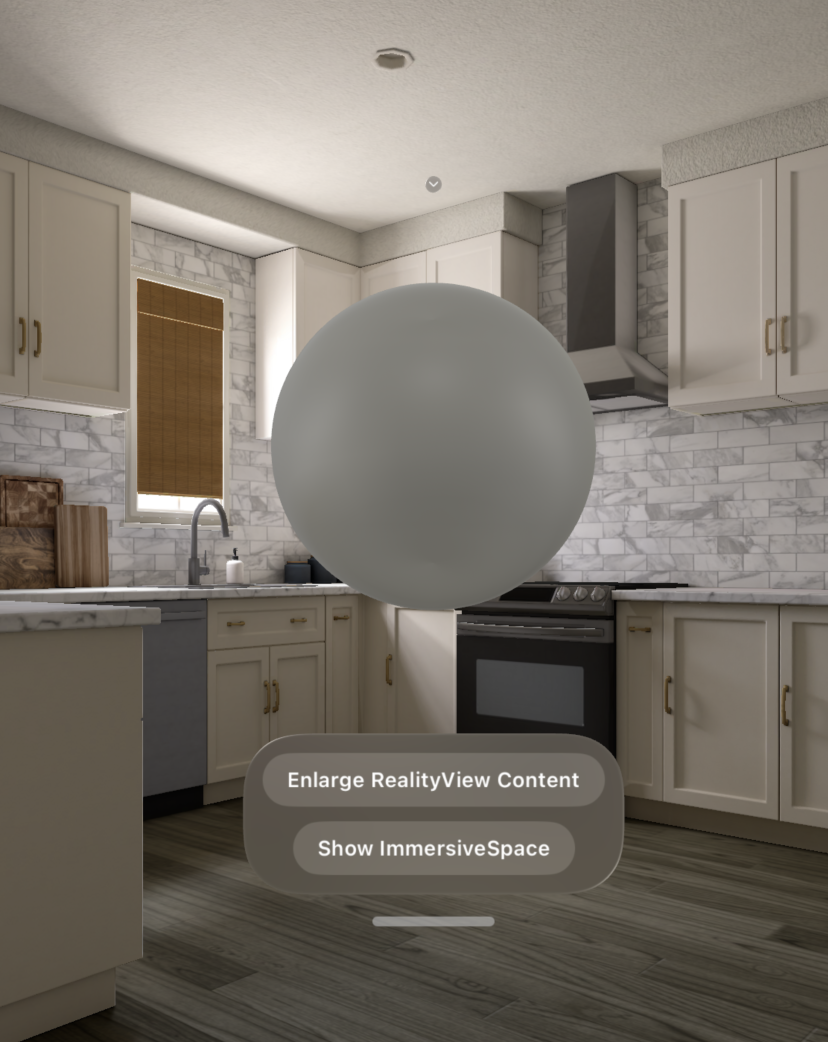

The default generated project will create the sphere object you see above.

The entire view, including the sphere and its associated window, is generated by the code on the left.

The sphere itself is created using a RealityView in the SwiftUI view.

The first closure of the RealityView (the make closure) designates the content for RealityView to build. Here it loads a scene, a customizable Entity that tells RealityView what to display.

The second closure is an update closure that applies changes when the SwiftUI state it depends on changes.
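The template code on the left follows roughly this shape. The sketch assumes the `RealityKitContent` package that the visionOS template generates (it exposes `realityKitContentBundle` and a scene named "Scene"); the 1.4 scale factor is just an example value.

```swift
import SwiftUI
import RealityKit
import RealityKitContent

struct ContentView: View {
    // State read by the update closure; toggled by the button below.
    @State private var enlarge = false

    var body: some View {
        VStack {
            RealityView { content in
                // Make closure: runs once to load and add the initial scene.
                if let scene = try? await Entity(named: "Scene", in: realityKitContentBundle) {
                    content.add(scene)
                }
            } update: { content in
                // Update closure: reruns when `enlarge` changes.
                if let scene = content.entities.first {
                    let scale: Float = enlarge ? 1.4 : 1.0
                    scene.transform.scale = [scale, scale, scale]
                }
            }

            Toggle("Enlarge RealityView Content", isOn: $enlarge)
                .toggleStyle(.button)
                .padding()
        }
    }
}
```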

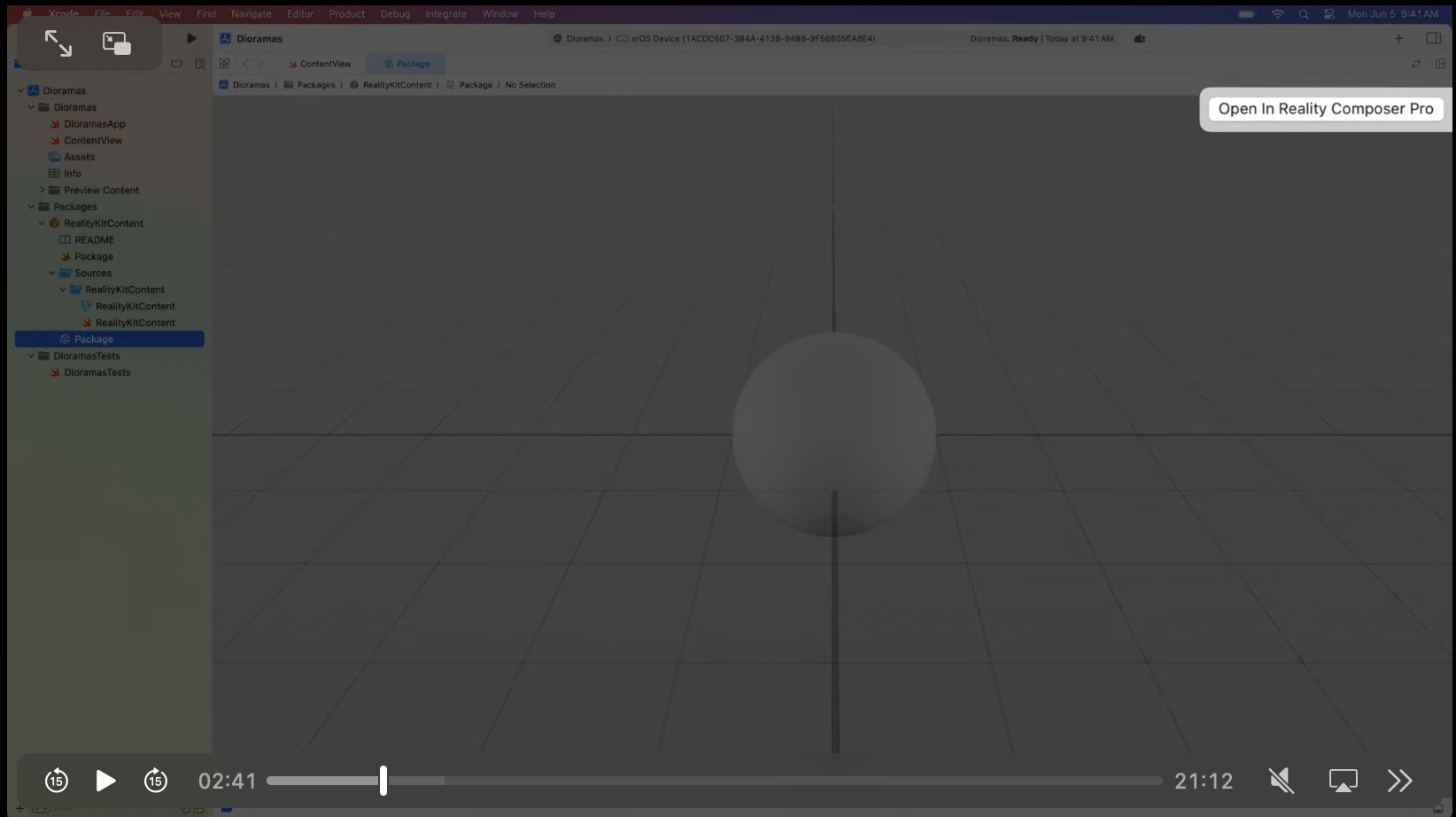

To create new scenes, select the Reality Composer Pro project file in the RealityKitContent package. When the file opens, you will see the current scene suspended in a 3D grid. In the top-right corner, click “Open in Reality Composer Pro” to edit and add new scenes to your application.

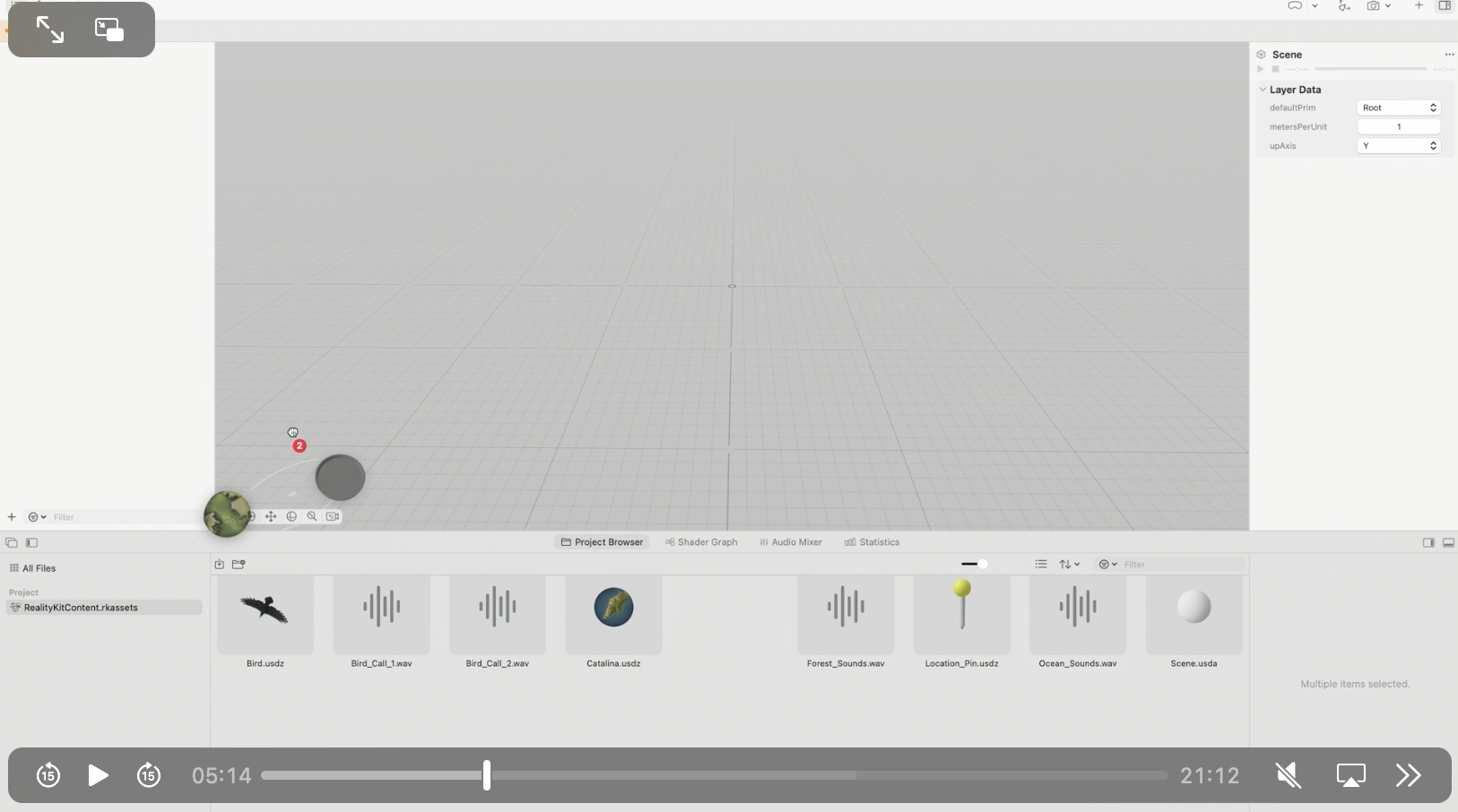

Reality Composer Pro offers a full studio where you can edit and add new scenes

For example, by pressing the + icon in the scenes window, you can add primitive shapes such as a cube

Upon saving your changes, the new modifications to your scene will appear in your view

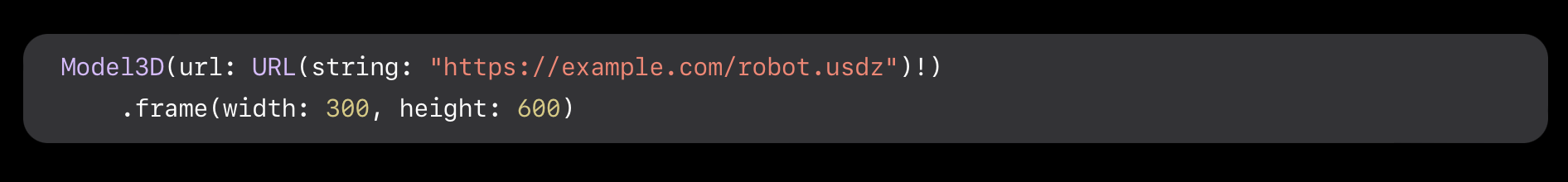

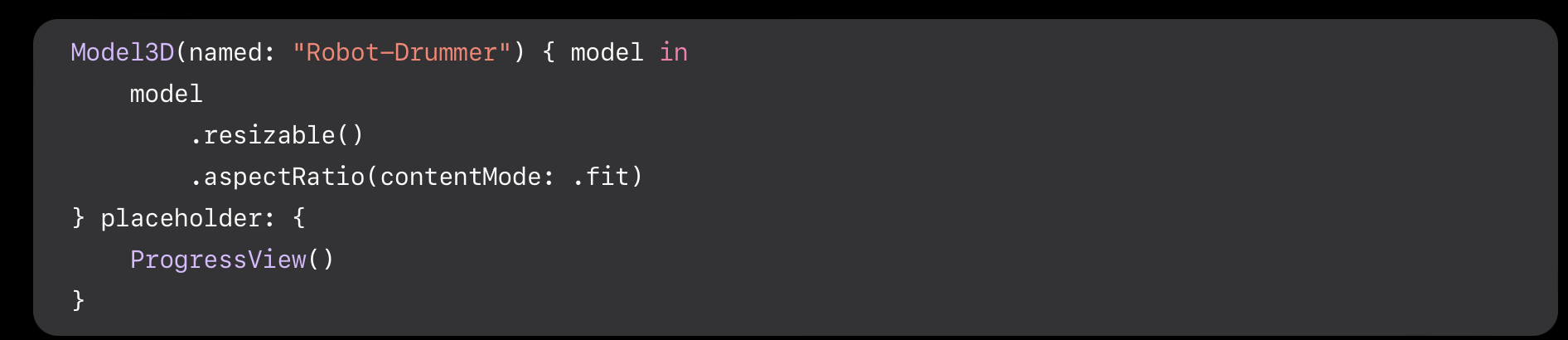

Another way to display 3D content in volumes is with Model3D. If you have a USDZ or Reality file saved in your project, or a URL to one of those files, you can render it by passing the file's name (or URL) to Model3D.
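A minimal sketch of both Model3D forms, assuming a model named "Scene" in the template's RealityKitContent package and a hypothetical remote USDZ URL.

```swift
import SwiftUI
import RealityKit
import RealityKitContent

struct ModelGallery: View {
    // Hypothetical remote USDZ file, for illustration only.
    private let remoteURL = URL(string: "https://example.com/models/teapot.usdz")!

    var body: some View {
        HStack {
            // Loads a model by name from the RealityKitContent package.
            Model3D(named: "Scene", bundle: realityKitContentBundle)

            // Loads a model from a URL, showing a spinner until it is ready.
            Model3D(url: remoteURL) { model in
                model
                    .resizable()
                    .scaledToFit()
            } placeholder: {
                ProgressView()
            }
        }
    }
}
```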

Adding Animation to 3D Objects Using TimelineView

TimelineView is a SwiftUI view that updates its content on a schedule. This can be used to add continuous animation to volumes. In the example to the left, the celestial object in the volume rotates because its angle is recalculated on the cadence set by the TimelineView.
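Here is a sketch of the TimelineView approach, assuming a hypothetical model named "Moon" in the app bundle and an arbitrary 20-second rotation period. The angle is derived from the timeline's current date, so the rotation is continuous rather than driven by a one-off animation.

```swift
import SwiftUI
import RealityKit

struct RotatingMoonView: View {
    // Seconds per full revolution; an arbitrary example value.
    private let secondsPerRevolution = 20.0

    var body: some View {
        // The .animation schedule requests an update for every display frame.
        TimelineView(.animation) { context in
            Model3D(named: "Moon")   // hypothetical asset in the main bundle
                .rotation3DEffect(
                    .degrees(angle(at: context.date)),
                    axis: (x: 0, y: 1, z: 0)
                )
        }
    }

    // Maps the current time to an angle in [0, 360).
    private func angle(at date: Date) -> Double {
        let turns = date.timeIntervalSinceReferenceDate / secondsPerRevolution
        return turns.truncatingRemainder(dividingBy: 1) * 360
    }
}
```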

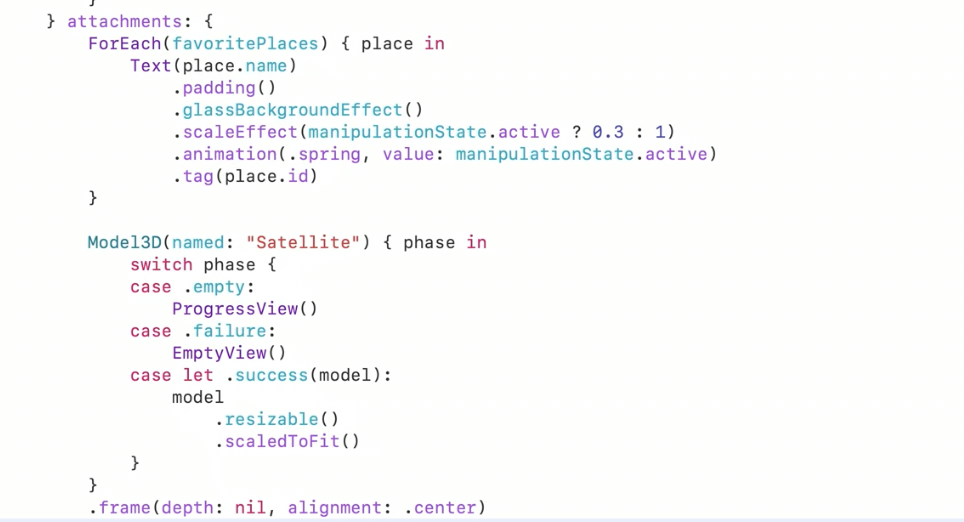

Complementing Your 3D Objects with Attachments

Attachments let you embed SwiftUI views in a RealityView's scene as entities, adding UI elements such as labels and buttons alongside your 3D content

Gestures can also be added to entities in a volume so they respond when a user interacts with them
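The sketch below combines both ideas in one RealityView: a SwiftUI attachment labels a sphere, and a tap gesture targeted at entities updates the label. The view name, the "label" attachment ID, and the sphere are hypothetical. Note that an entity needs an InputTargetComponent and collision shapes before it can receive gestures.

```swift
import SwiftUI
import RealityKit

struct LabeledSphereView: View {
    @State private var tapCount = 0

    var body: some View {
        RealityView { content, attachments in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // Both of these are required for the entity to receive gestures.
            sphere.components.set(InputTargetComponent())
            sphere.generateCollisionShapes(recursive: false)
            content.add(sphere)

            // Place the SwiftUI attachment 15 cm above the sphere.
            if let label = attachments.entity(for: "label") {
                label.position = [0, 0.15, 0]
                sphere.addChild(label)
            }
        } update: { _, _ in
        } attachments: {
            Attachment(id: "label") {
                Text("Tapped \(tapCount) times")
                    .padding()
                    .glassBackgroundEffect()
            }
        }
        // Fires when the user looks at any targetable entity and taps.
        .gesture(
            TapGesture()
                .targetedToAnyEntity()
                .onEnded { _ in tapCount += 1 }
        )
    }
}
```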

Features Available in RealityKit

Make it stand out

The RealityKit framework gives developers access to a suite of features that make augmented reality development in visionOS faster and better. These features include photorealistic rendering, camera effects, animations, spatial audio, and rigid body physics.

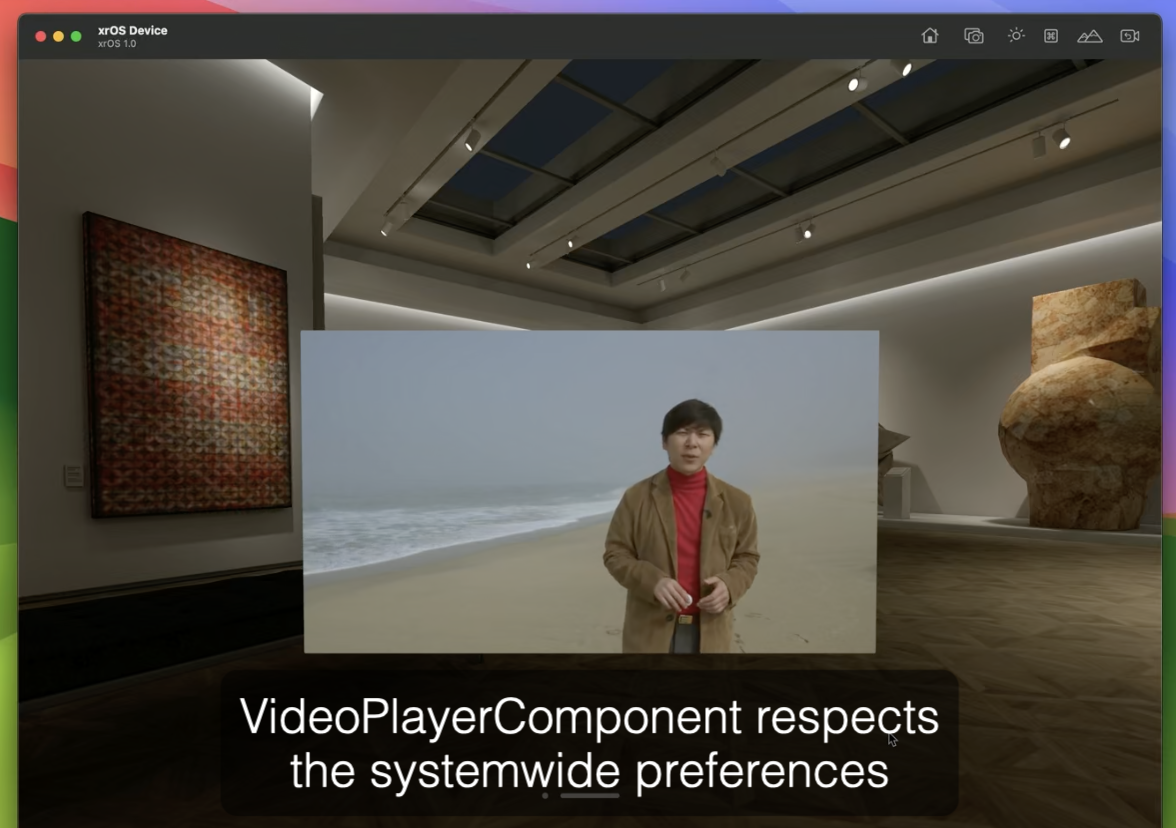

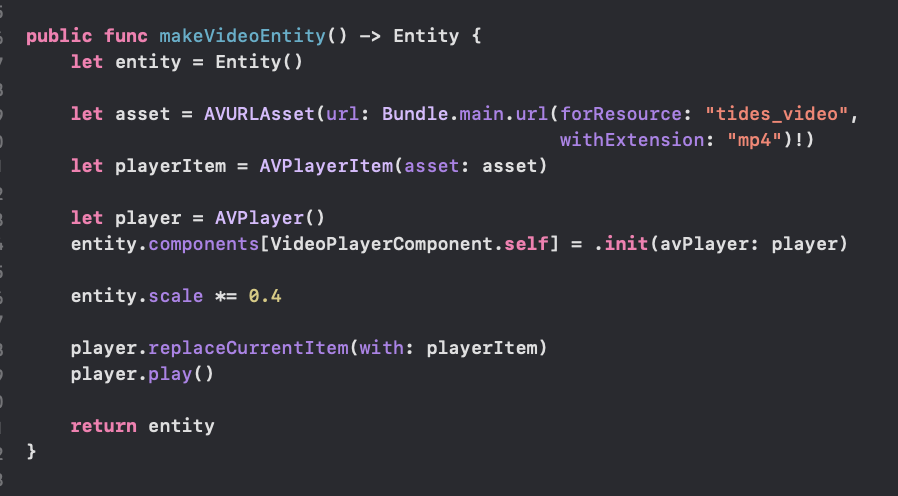

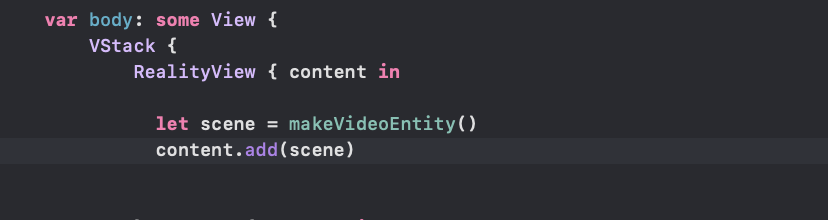

Adding Videos Using RealityKit

RealityKit can be used to display video playback in your app. An AVPlayer (from AVFoundation, the framework AVKit builds on) created with the URL of an asset can be converted into an entity, and that entity can be added to a RealityView to display the video.
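A minimal sketch of video playback in RealityKit, assuming a hypothetical video URL. It uses RealityKit's VideoPlayerComponent to attach an AVPlayer to an entity; VideoMaterial is an alternative when you want to map video onto your own mesh.

```swift
import SwiftUI
import RealityKit
import AVFoundation

struct VideoScreenView: View {
    // Hypothetical video URL; replace with a bundled or remote asset.
    private let videoURL = URL(string: "https://example.com/trailer.mp4")!

    var body: some View {
        RealityView { content in
            let player = AVPlayer(url: videoURL)

            // VideoPlayerComponent renders the player's output on the entity.
            let videoEntity = Entity()
            videoEntity.components.set(VideoPlayerComponent(avPlayer: player))
            content.add(videoEntity)

            player.play()
        }
    }
}
```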

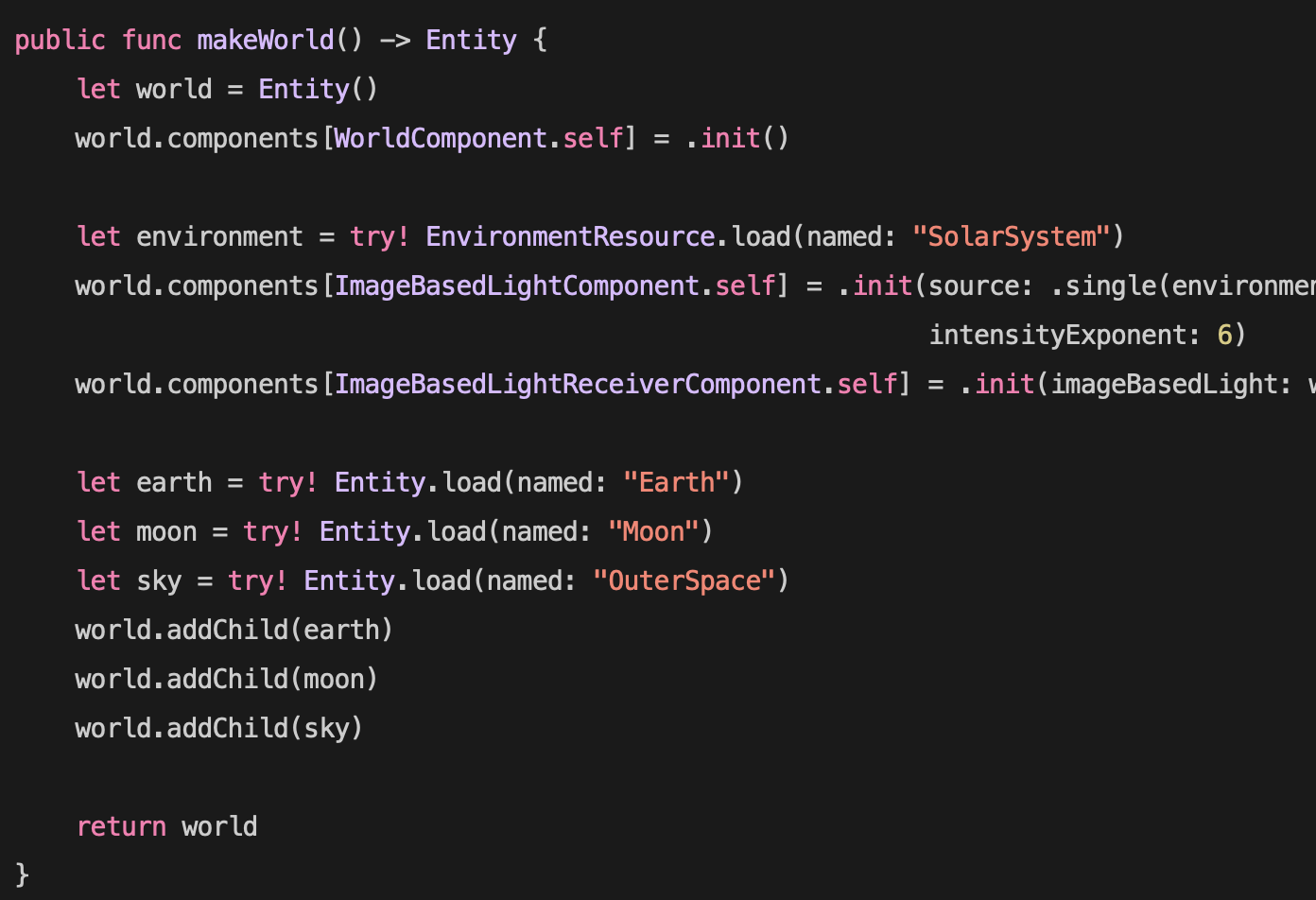

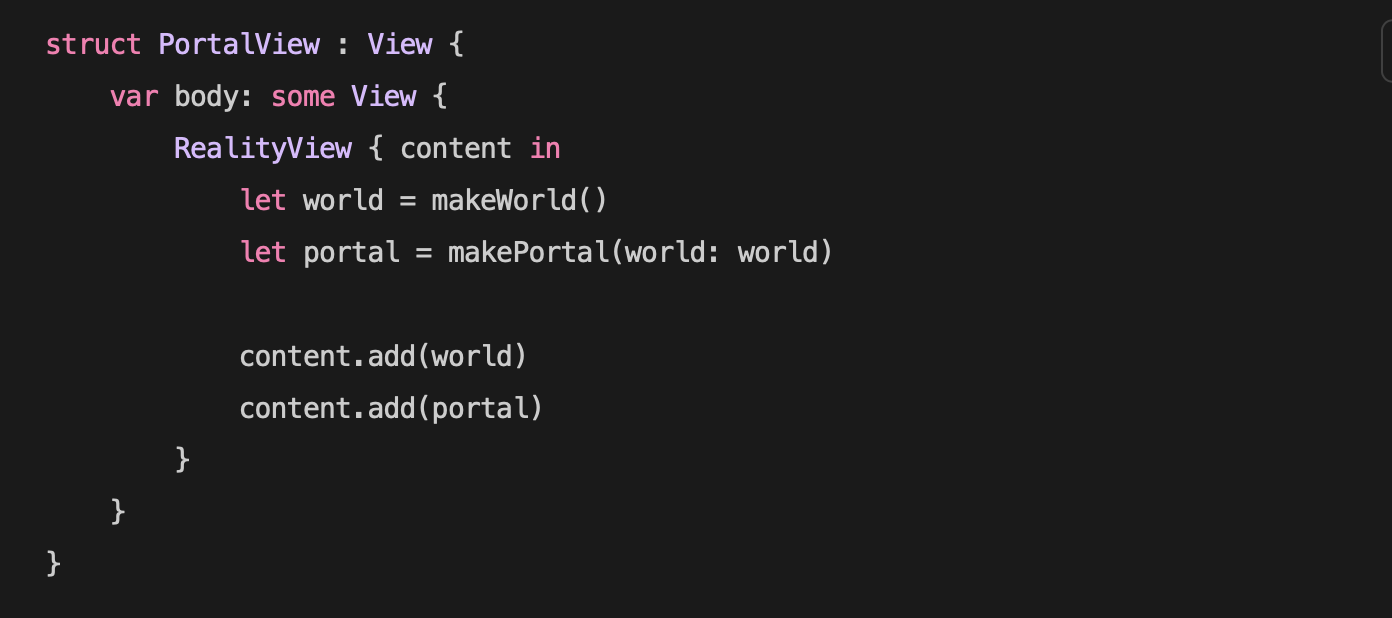

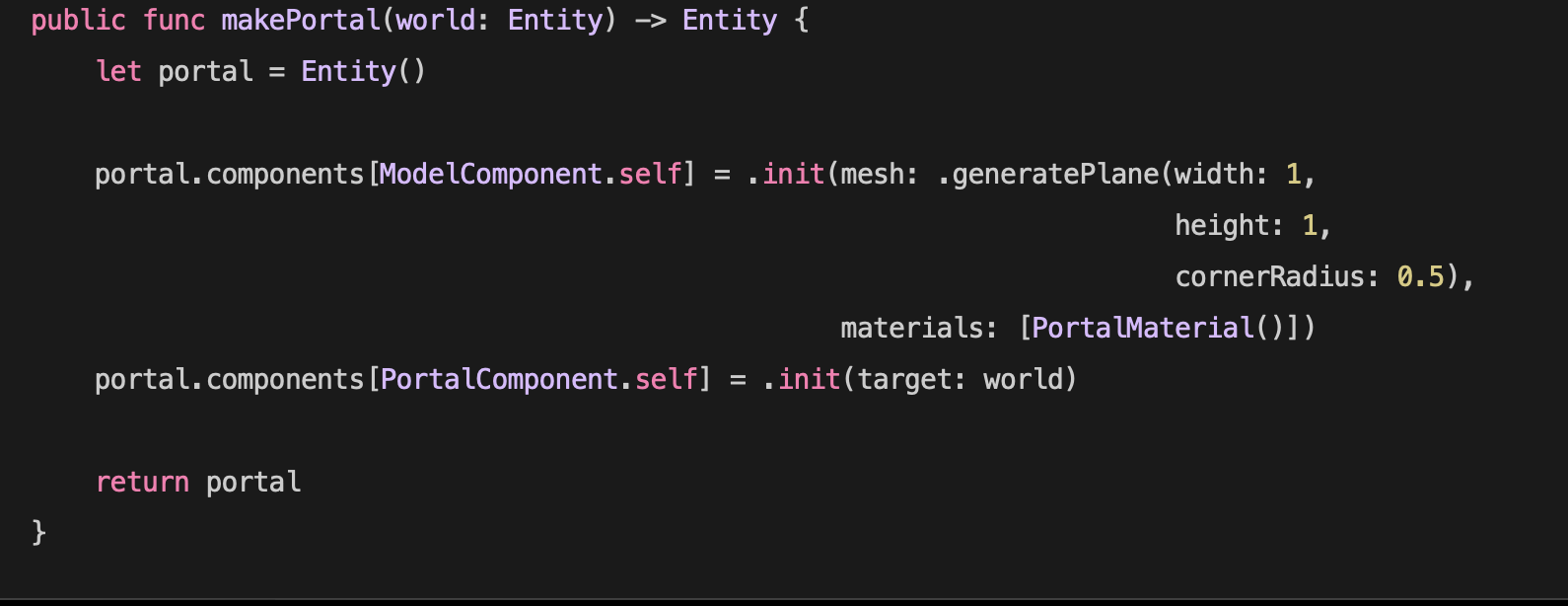

Creating Worlds and Portals Using RealityKit

In RealityKit, a world is a container entity whose child entities are visible only inside that world. In the example below, a world entity is created that contains three 3D entities: a moon, an earth, and a sky. To see these entities, you view them through a portal. A portal is an entity that renders the contents of another world through its surface, acting as a transition from one world into another. This ability to separate entities into different worlds shows how large a canvas visionOS gives developers.
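Here is a sketch of the world-and-portal pattern. A single gray sphere stands in for the moon, earth, and sky entities described above, and the sizes and positions are illustrative assumptions.

```swift
import SwiftUI
import RealityKit

struct PortalView: View {
    var body: some View {
        RealityView { content in
            let world = makeWorld()
            content.add(world)
            content.add(makePortal(to: world))
        }
    }

    // Children of an entity with a WorldComponent are only visible through a portal.
    private func makeWorld() -> Entity {
        let world = Entity()
        world.components.set(WorldComponent())

        // Placeholder for the moon/earth/sky entities.
        let moon = ModelEntity(
            mesh: .generateSphere(radius: 0.2),
            materials: [SimpleMaterial(color: .gray, isMetallic: false)]
        )
        moon.position = [0, 0, -1]
        world.addChild(moon)
        return world
    }

    // A rounded plane with PortalMaterial that shows the target world's contents.
    private func makePortal(to world: Entity) -> Entity {
        let portal = Entity()
        portal.components.set(ModelComponent(
            mesh: .generatePlane(width: 1, height: 1, cornerRadius: 0.5),
            materials: [PortalMaterial()]
        ))
        portal.components.set(PortalComponent(target: world))
        return portal
    }
}
```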

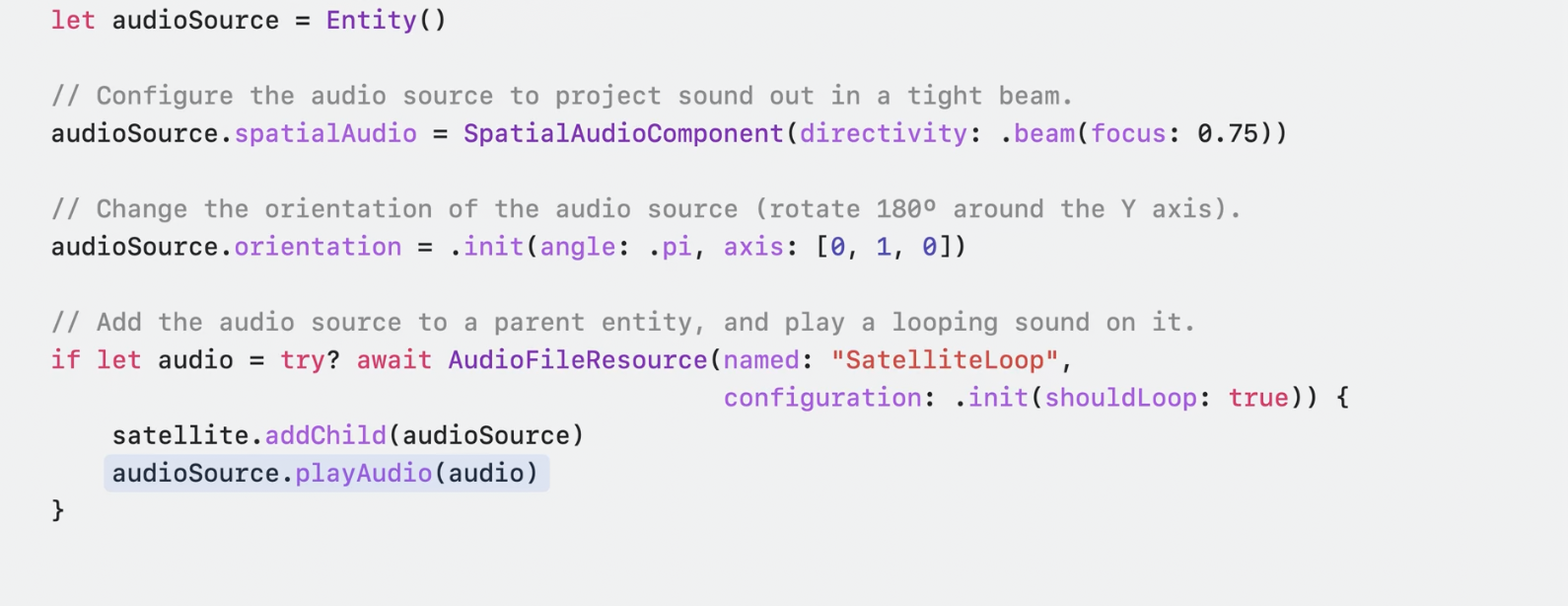

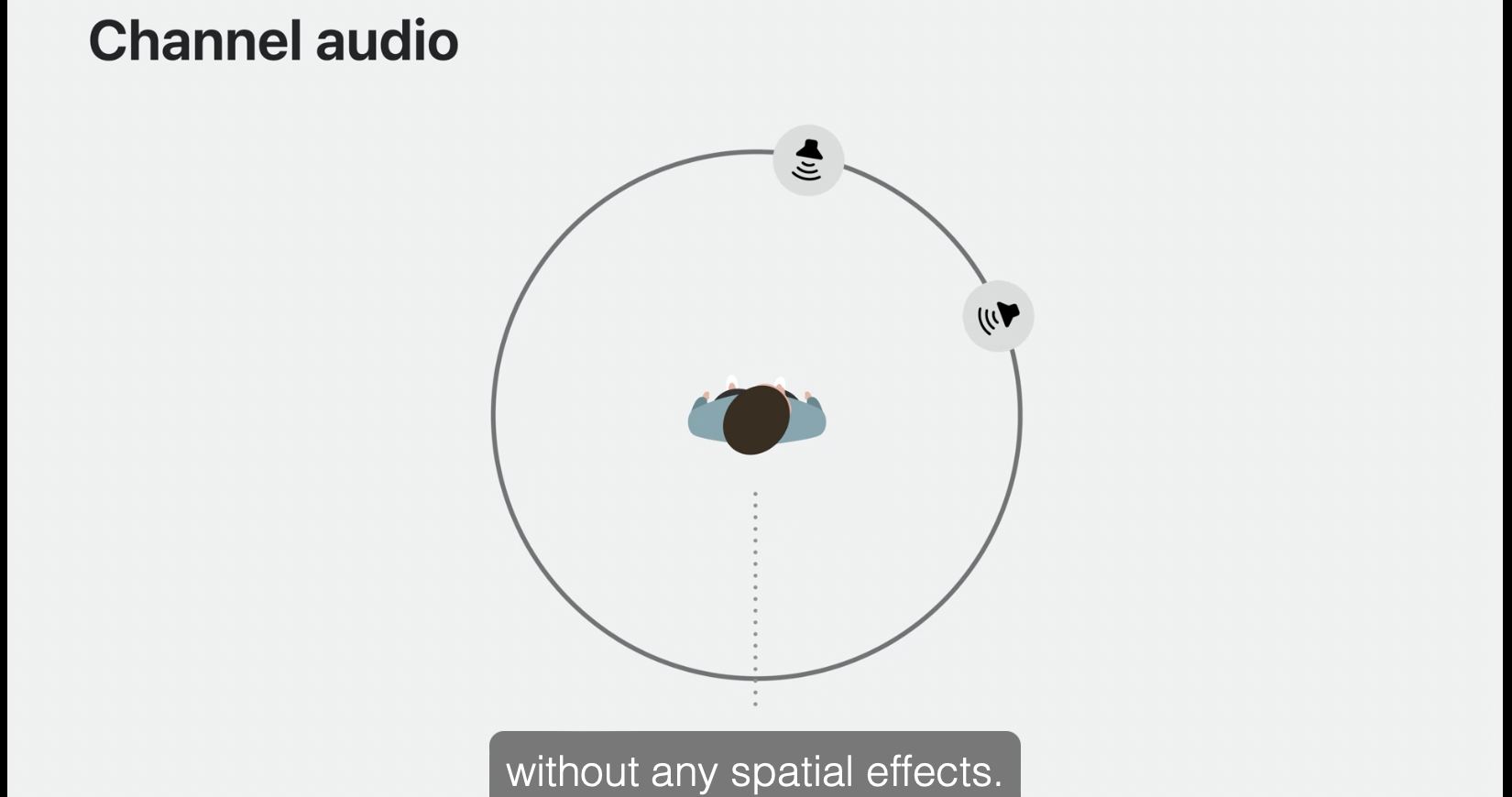

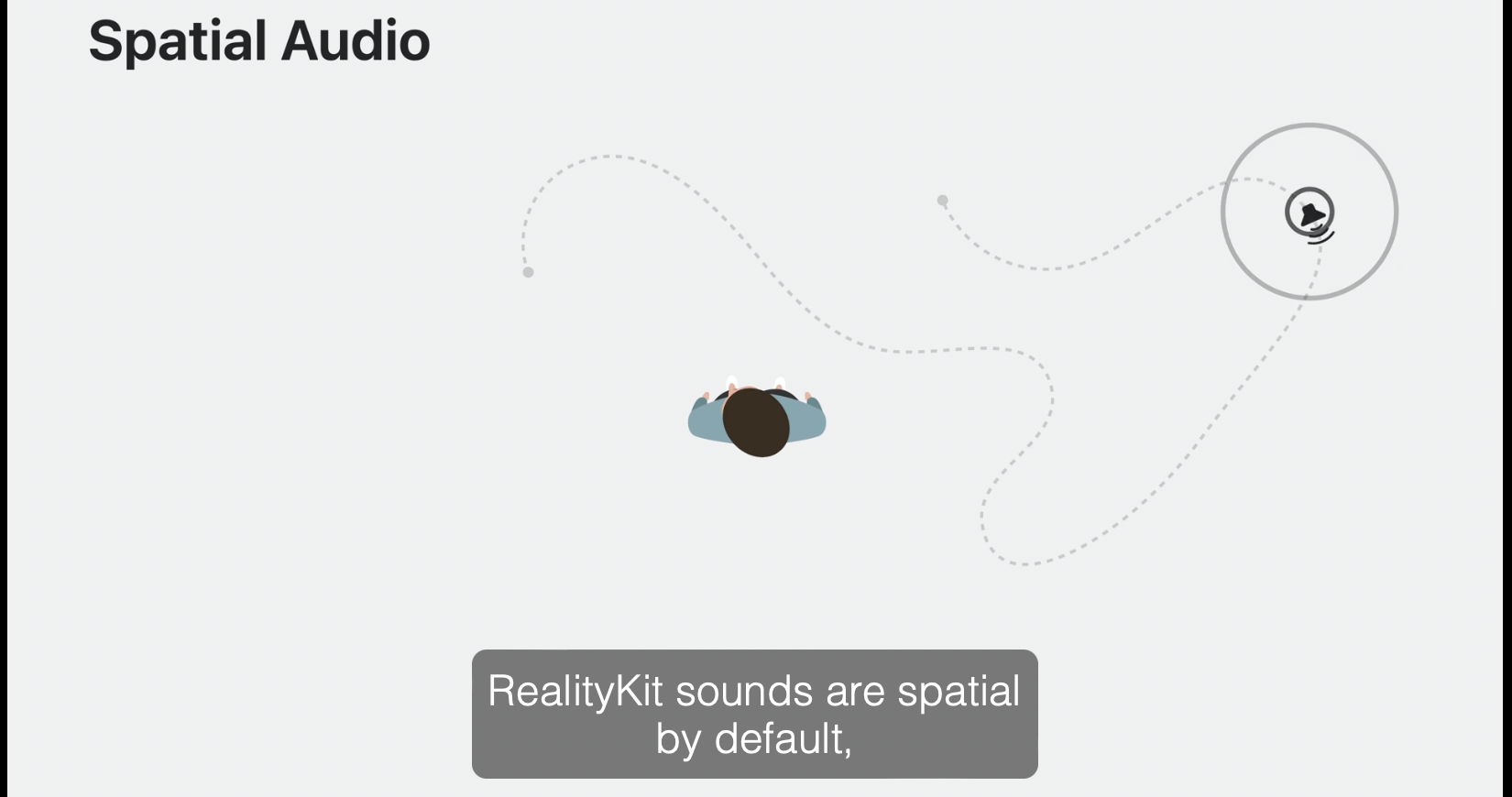

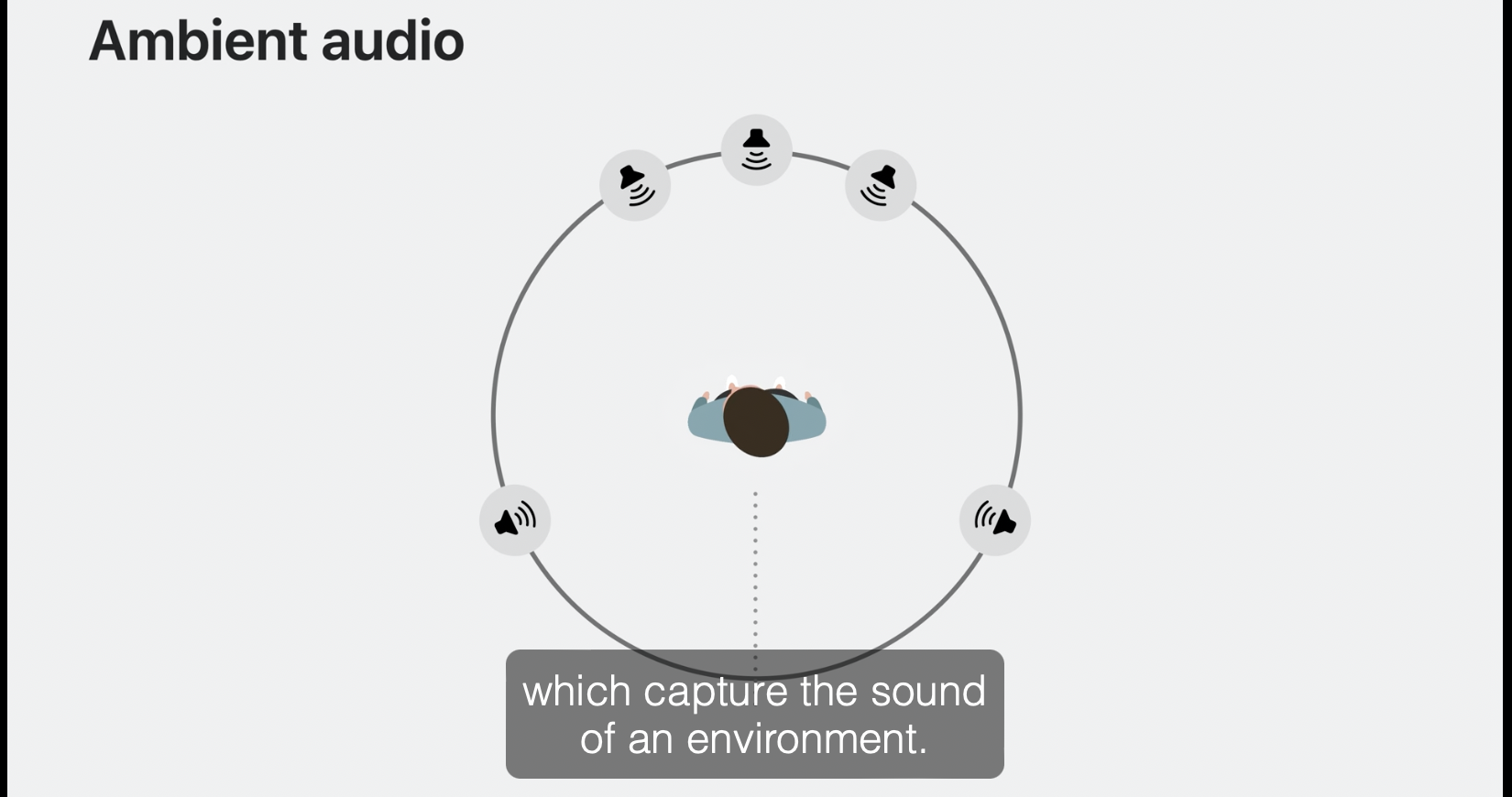

Adding Audio Using RealityKit

RealityKit also gives developers the ability to assign audio to entities. In RealityKit, sound can take three forms: spatial, ambient, and channel. Spatial audio is emitted from an entity's position in 3D space, which works well for giving a moving object its own sound. Ambient audio plays each channel of a recording from a fixed direction around the user, which suits atmospheric sounds. Channel audio plays the file's channels directly without spatialization, which suits background music. Audio is configured by adding the corresponding component to an entity, and an audio source entity can be added as a child of another entity so the sound moves with it. In the example code, a spatial audio entity is created and added to a moving 3D satellite entity.
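A sketch of the satellite example, assuming a hypothetical looping audio file named "SatelliteLoop" in the app bundle and an existing `satellite` entity that is already being animated.

```swift
import RealityKit

// Attaches a looping spatial sound to an entity that is already moving.
// "SatelliteLoop" is a hypothetical audio file name.
@MainActor
func addSpatialAudio(to satellite: Entity) async throws {
    let audioSource = Entity()
    // Spatial audio is emitted from this entity's position, so it follows the satellite.
    audioSource.components.set(SpatialAudioComponent())
    satellite.addChild(audioSource)

    let resource = try await AudioFileResource(
        named: "SatelliteLoop",
        configuration: .init(shouldLoop: true)
    )
    _ = audioSource.playAudio(resource)
}
```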

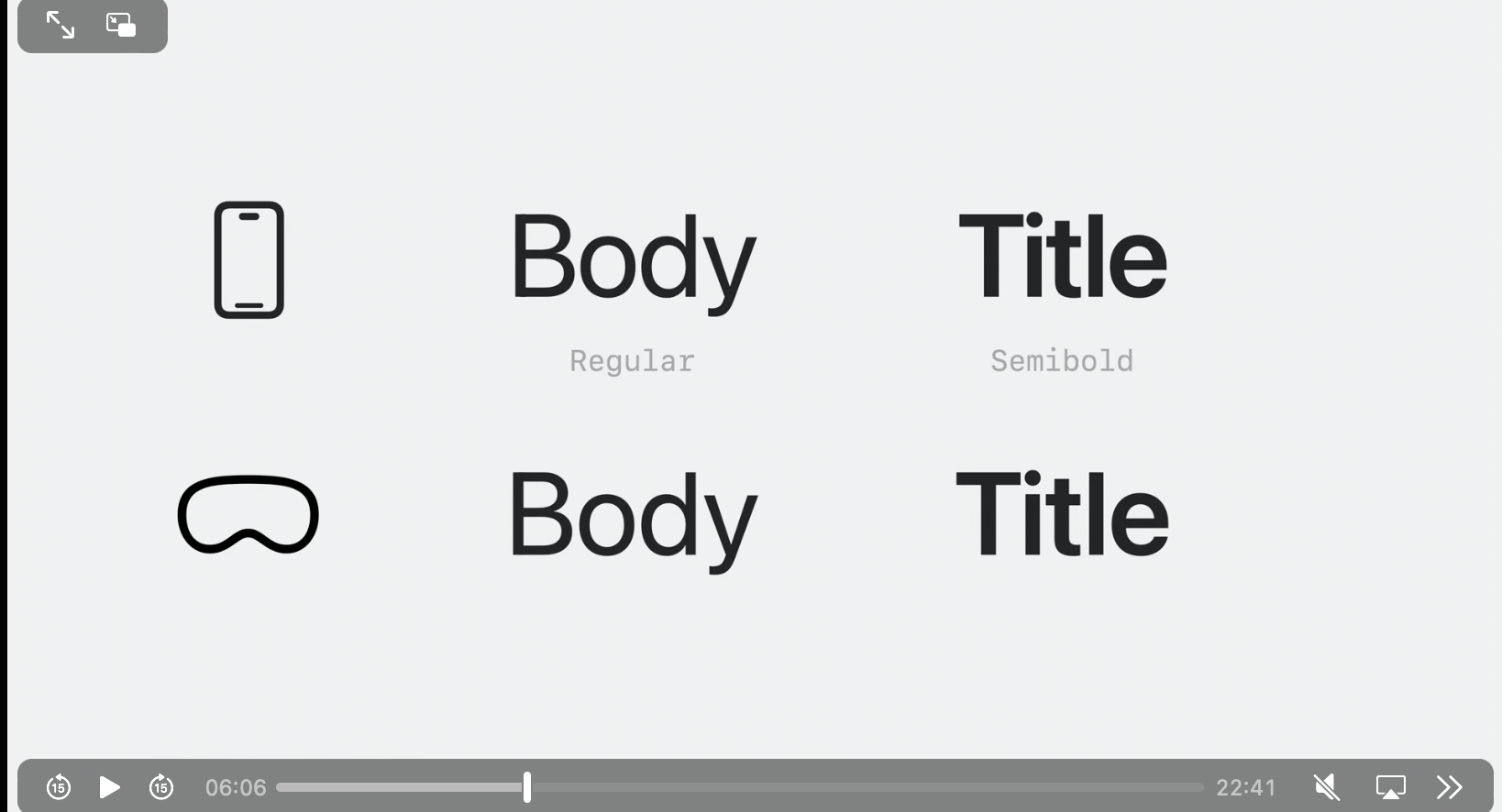

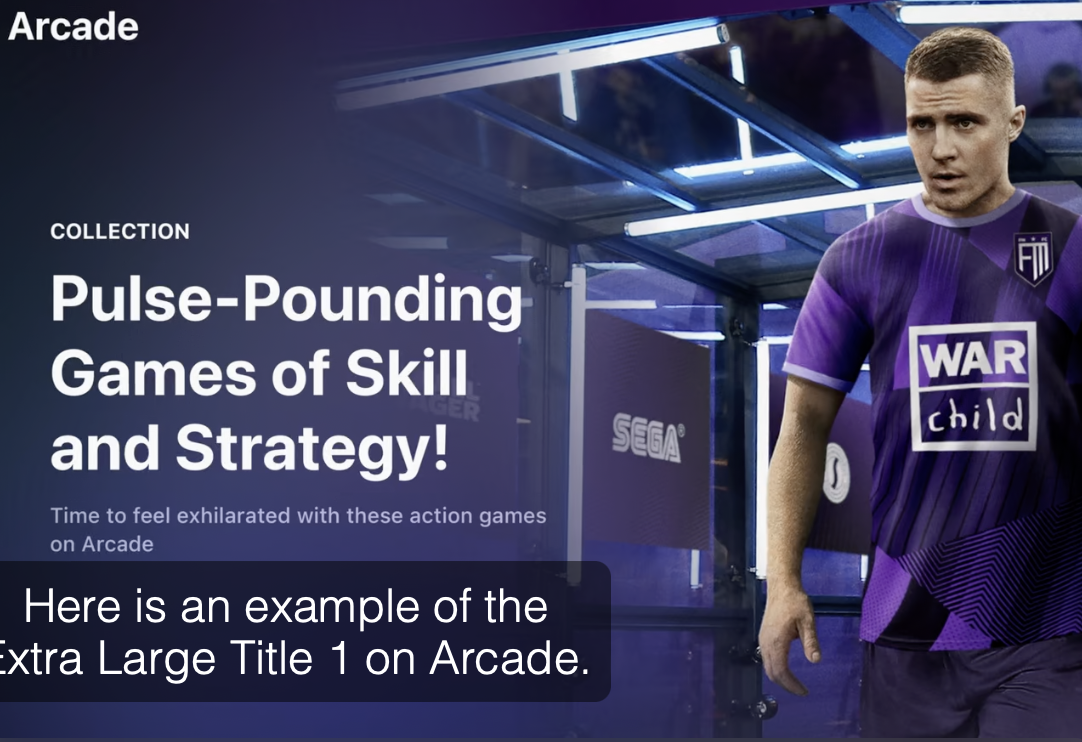

Tips for designing in visionOS

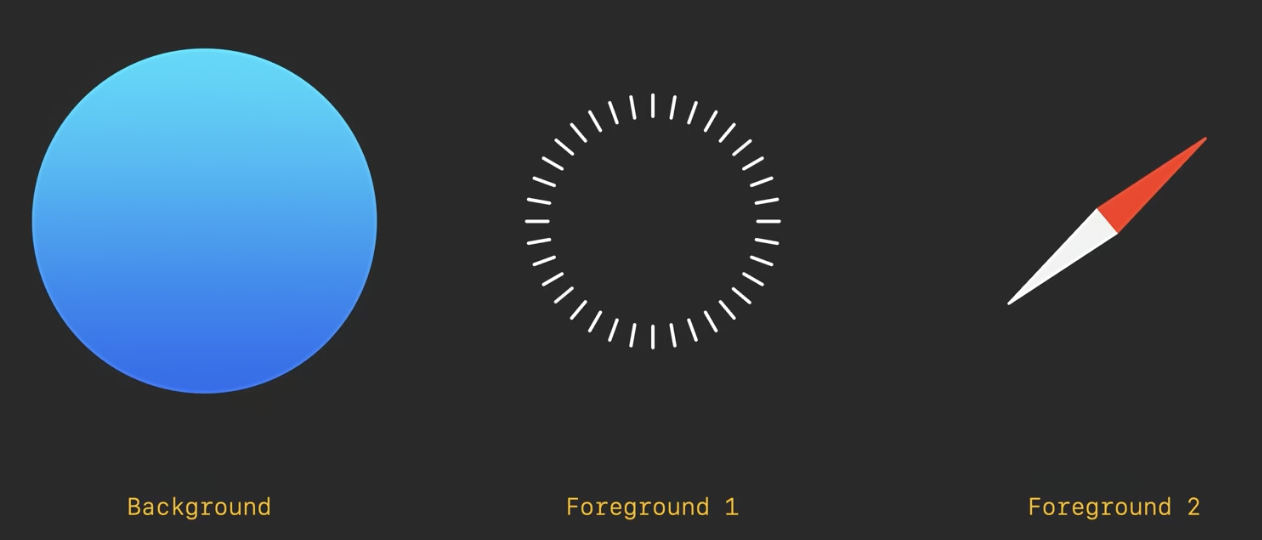

App icons in visionOS are 3D. When designing icons, it is recommended to use three layers.

Keep windows translucent to give users context of the outside world

Keep text white and bold for clear communication

Customize vibrancy to give titles or more important text additional focus

Keep important information in the center of the view. For ergonomic reasons, prefer adding content to the left and right of a user's view rather than above or below it.

Make buttons a minimum size of 60 points. Keep content in buttons centered. Avoid using white backgrounds for buttons.

Keep the corners of windows and ornaments rounded. Also use dynamic scaling, so that windows grow as they move farther from the user and shrink as they move closer, keeping content legible.

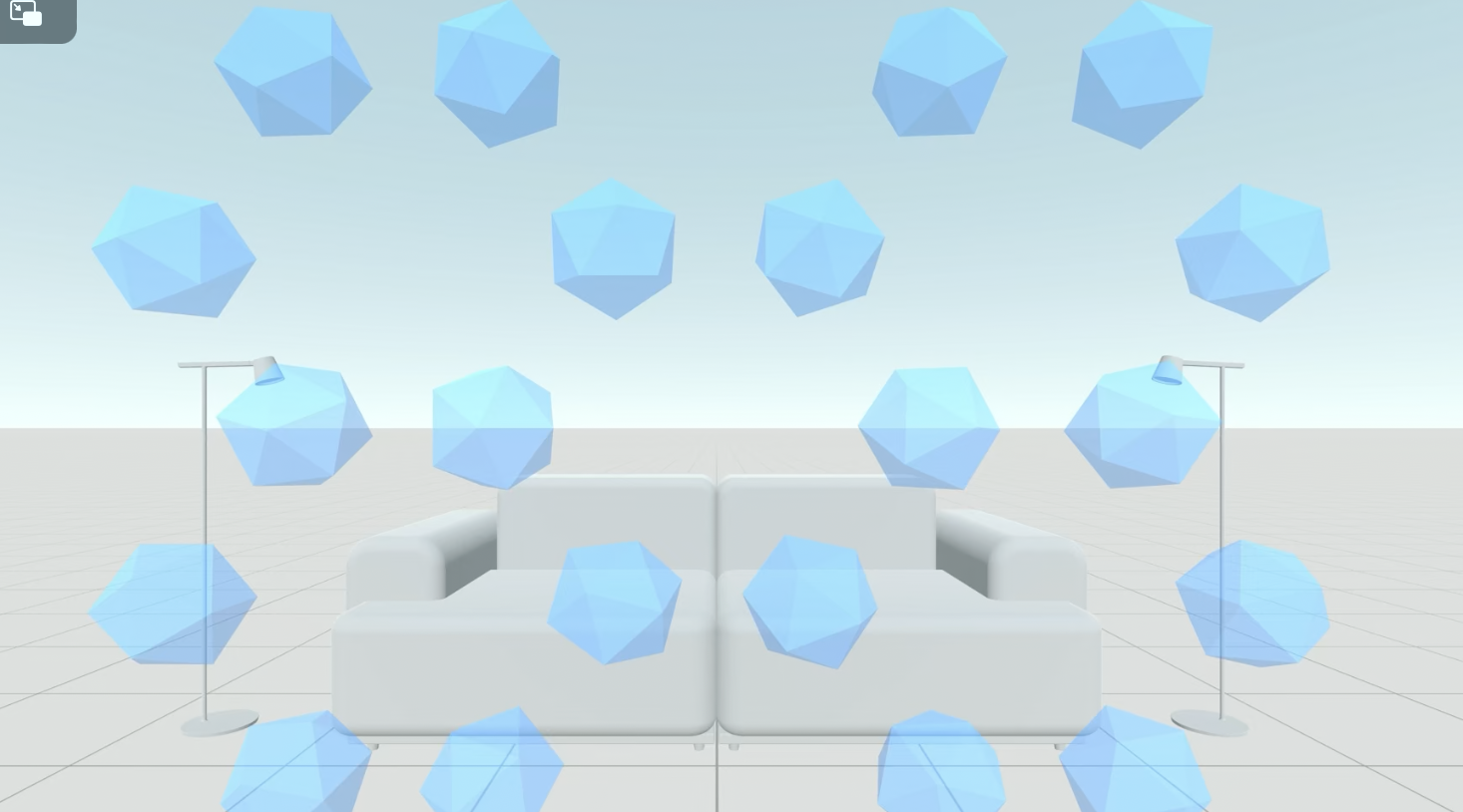

Tips for designing with vision and motion

Provide depth cues.

Depth cues are visual cues humans use to judge how near or far away something is. It is important to provide depth cues so your users have a realistic experience. Depth cues can be created using relative size, color, shadow, and blur

Place content that users need to read at arm's length. Also keep text content centered, and keep text blocks narrow where possible

Understand the comfortable ranges of eye rotation and place content within those ranges. Looking to the center, left, and right is comfortable; looking diagonally upward is less so.

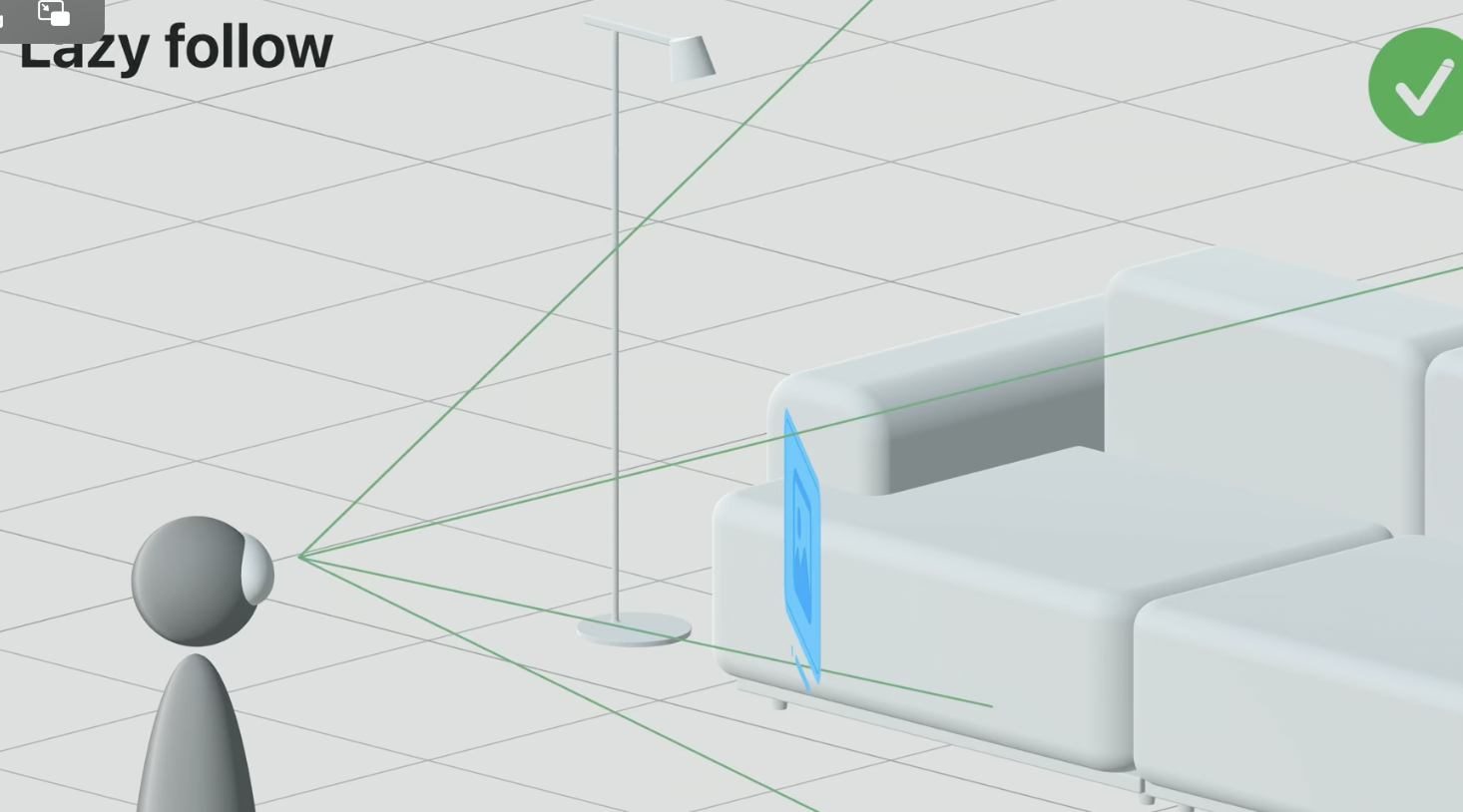

Tips for dealing with objects in motion

When objects are in motion, make them slightly transparent to communicate to the user that the objects are moving, not the user

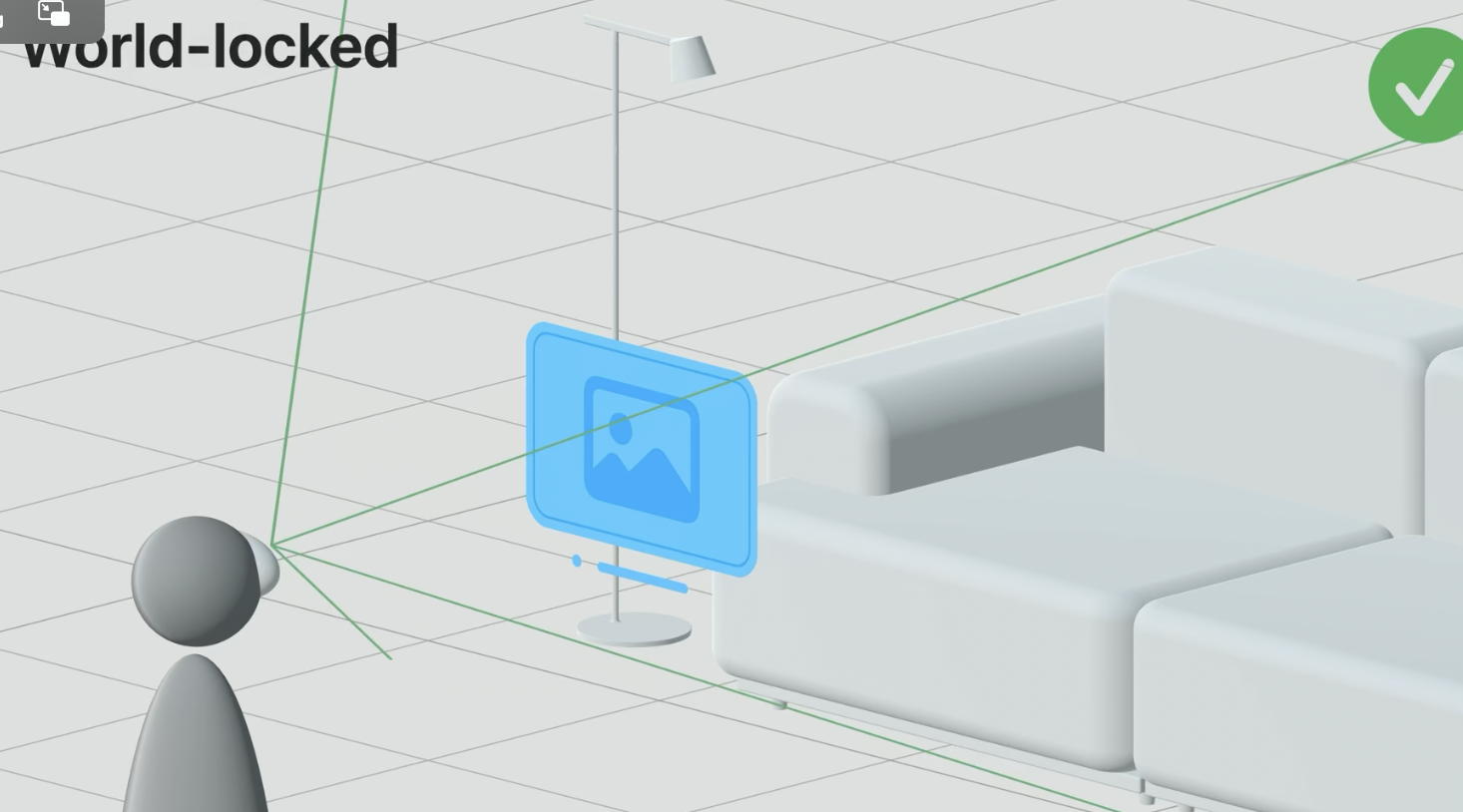

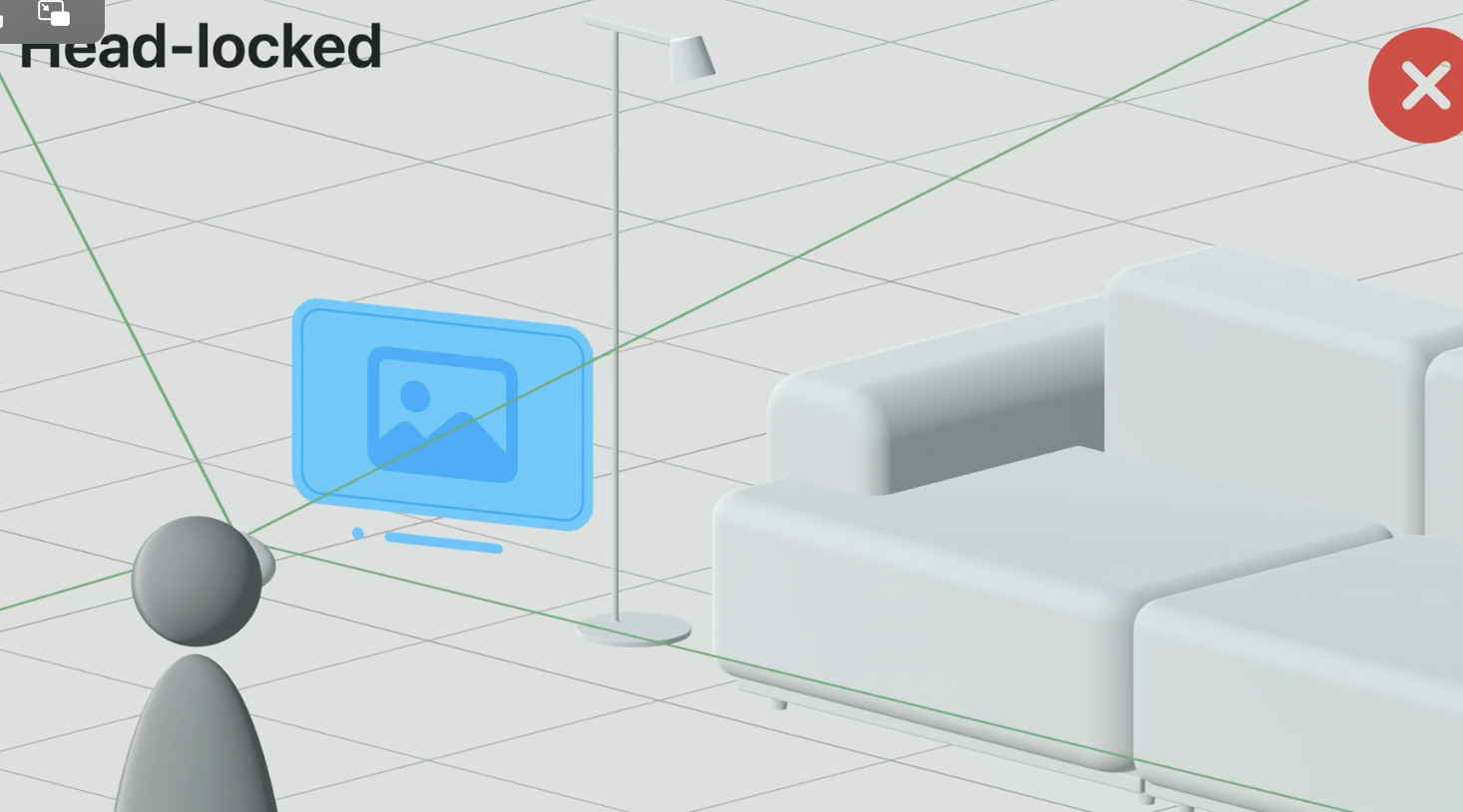

Avoid head locking content. Head lock content occurs when an object follows the line of sight of a user. This can make the experience feel constrained. Make content world locked, meaning objects have static positions, or implement a lazy follow to where objects to stay in a viewers line of sight but they react slowly to when a viewer turns their head.

Avoid ossicalting objects, for they can make a user dizzy

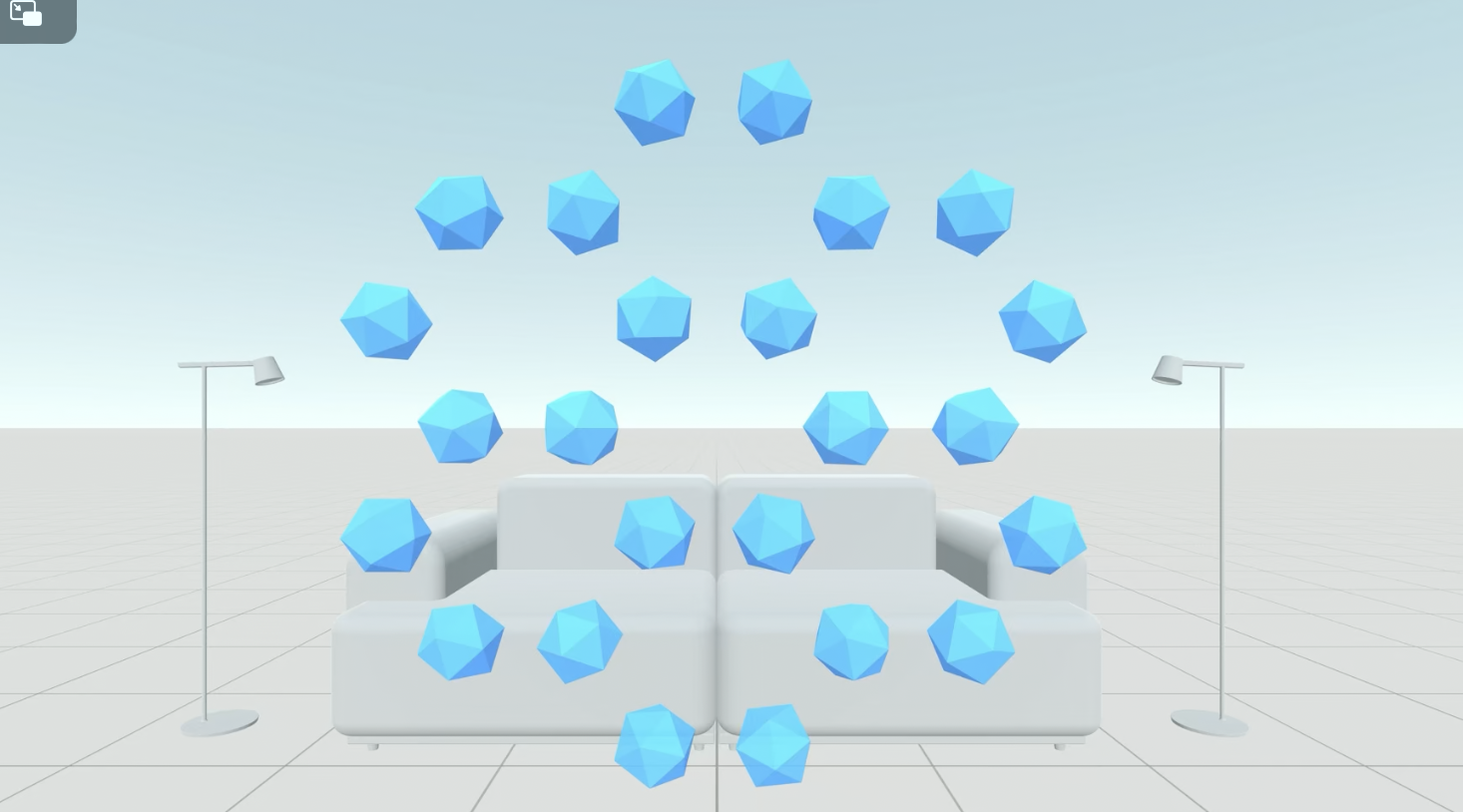

Avoid repetitive large objects passing by a user to avoid an uncomfortable experience

Designing for SharePlay

When designing your app, it is important to make sure it is compatible with SharePlay.

SharePlay allows your app to be launched in a collaborative mode in which other users experience the application simultaneously.

When using SharePlay, your app can share one window or immersive experience at a time

SharePlay can be launched from a button, for example in a modal pop-up window

SharePlay offers three templates for arranging participants.

1. Side by side is good for having users focus on a central window

2. Surround is good when dealing with a volume that users could all group around

3. Conversational is good when conversation between the users is the primary source of interaction in the SharePlay experience

Even though the app appears to be presented to all of the users at once, each user is actually running an individual instance of the app on their own device. This is an example of shared context. Your app should include personalization settings where users can adjust preferences, such as volume, without affecting the other users.
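To request one of these participant arrangements in code, an app can set a spatial template preference on the session's system coordinator. The sketch below assumes a hypothetical `ExploreTogether` activity with a placeholder identifier and title, and picks the side-by-side template.

```swift
import GroupActivities

// Hypothetical activity; the identifier and title are placeholders.
struct ExploreTogether: GroupActivity {
    static let activityIdentifier = "com.example.explore-together"

    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.title = "Explore Together"
        metadata.type = .generic
        return metadata
    }
}

// Starts SharePlay for the activity, for example from a button.
func startSharing() async throws {
    _ = try await ExploreTogether().activate()
}

// Joins incoming sessions and asks for a side-by-side participant arrangement.
@MainActor
func configureSessions() async {
    for await session in ExploreTogether.sessions() {
        if let coordinator = await session.systemCoordinator {
            var configuration = SystemCoordinator.Configuration()
            // .conversational is another built-in template.
            configuration.spatialTemplatePreference = .sideBySide
            coordinator.configuration = configuration
        }
        session.join()
    }
}
```

Each participant runs this code on their own device, which is what makes per-user settings such as volume possible.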

How to create and modify space in visionOS?

As stated above, manipulating open space gives developers an infinite canvas to position and orient applications outside of windows.

Space in visionOS is referred to as an immersive space and has three immersion styles:

mixed: the application's content appears alongside the user's real-world surroundings

progressive: the application replaces part of the user's surroundings, creating a portal between the real world and the space the application controls

full: the application's content fills all of the space available to the user

Space can be added to a project by declaring an ImmersiveSpace scene in the App

Embedding content in an immersive space allows it to live outside of a window

The immersion style of a space can be set using the .immersionStyle modifier

Space can be configured programmatically with state variables, such as the currently selected immersion style
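Pulling these pieces together, here is a minimal sketch of an app that declares an ImmersiveSpace, allows all three immersion styles, and opens or dismisses the space from a toggle. The app, view, and space ID names are hypothetical, and the sphere is placeholder content.

```swift
import SwiftUI
import RealityKit

@main
struct SpaceApp: App {
    // State holding the current immersion style.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        WindowGroup {
            LauncherView()
        }

        ImmersiveSpace(id: "space") {
            RealityView { content in
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.2),
                    materials: [SimpleMaterial(color: .cyan, isMetallic: false)]
                )
                // Positioned in the space around the user, outside any window.
                sphere.position = [0, 1.5, -1.5]
                content.add(sphere)
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .progressive, .full)
    }
}

struct LauncherView: View {
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace
    @Environment(\.dismissImmersiveSpace) private var dismissImmersiveSpace
    @State private var isOpen = false

    var body: some View {
        Toggle("Show Immersive Space", isOn: $isOpen)
            .toggleStyle(.button)
            .onChange(of: isOpen) { _, newValue in
                Task {
                    if newValue {
                        _ = await openImmersiveSpace(id: "space")
                    } else {
                        await dismissImmersiveSpace()
                    }
                }
            }
    }
}
```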

Launching SwiftUI and UIKit Apps in visionOS

Both UIKit and SwiftUI applications are compatible with visionOS, with certain modifications.

To launch UIKit and SwiftUI applications in visionOS, add Apple Vision to the target's supported destinations

SwiftUI views and UIKit view controllers automatically appear as windows when launched in visionOS, and those windows can be resized by dragging the corner of the window

Enhance iPhone/iPad Apps for the Shared Space

When launching an iPad or iPhone app in the Shared Space, the features unique to visionOS must be taken into consideration to understand which new and existing features will be compatible

Input Considerations in visionOS

One aspect of visionOS that iPad and iPhone apps adopt automatically is input interaction. In visionOS, users interact with the UI by tapping and touching with their hands, and potentially with game controllers

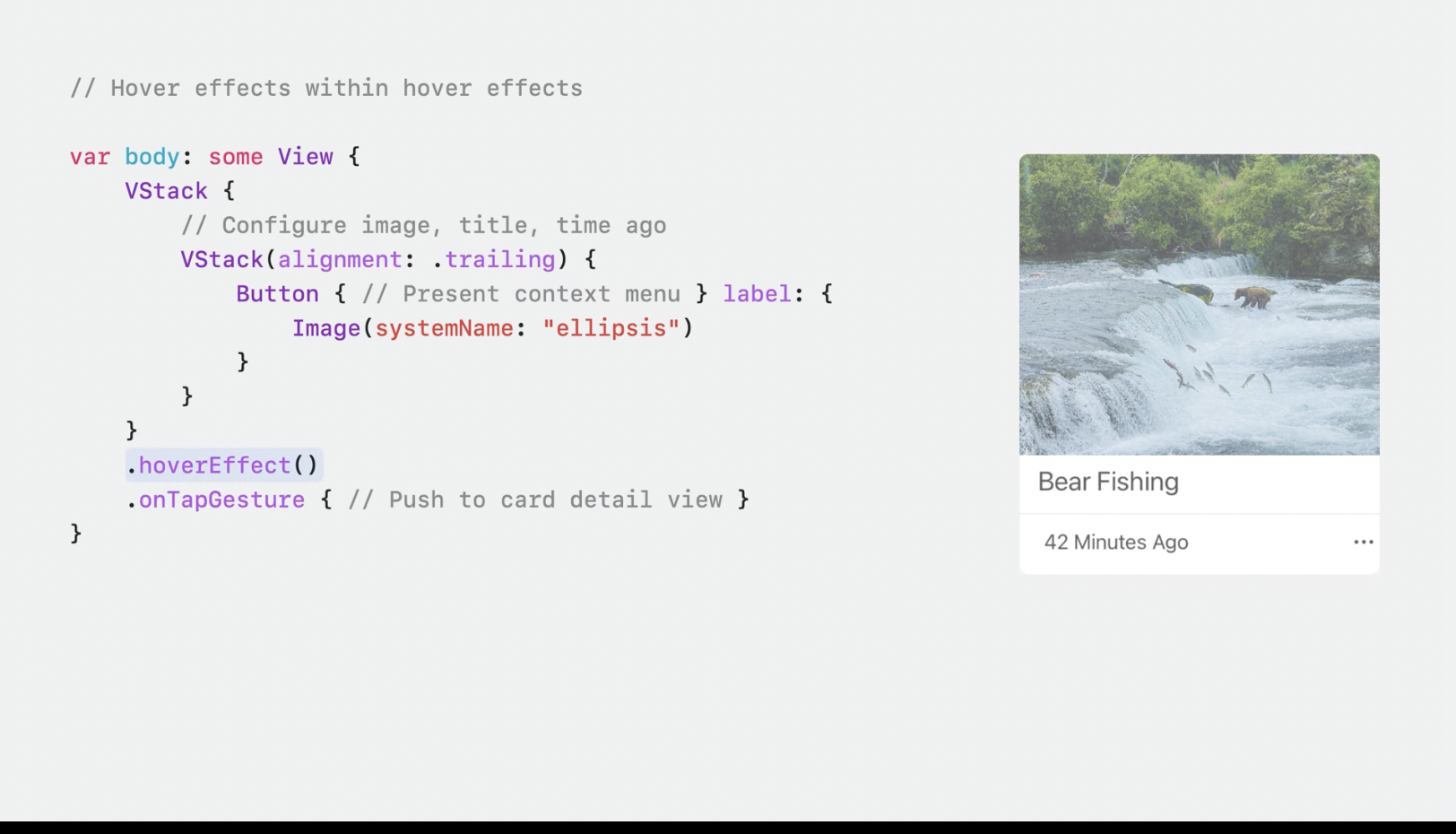

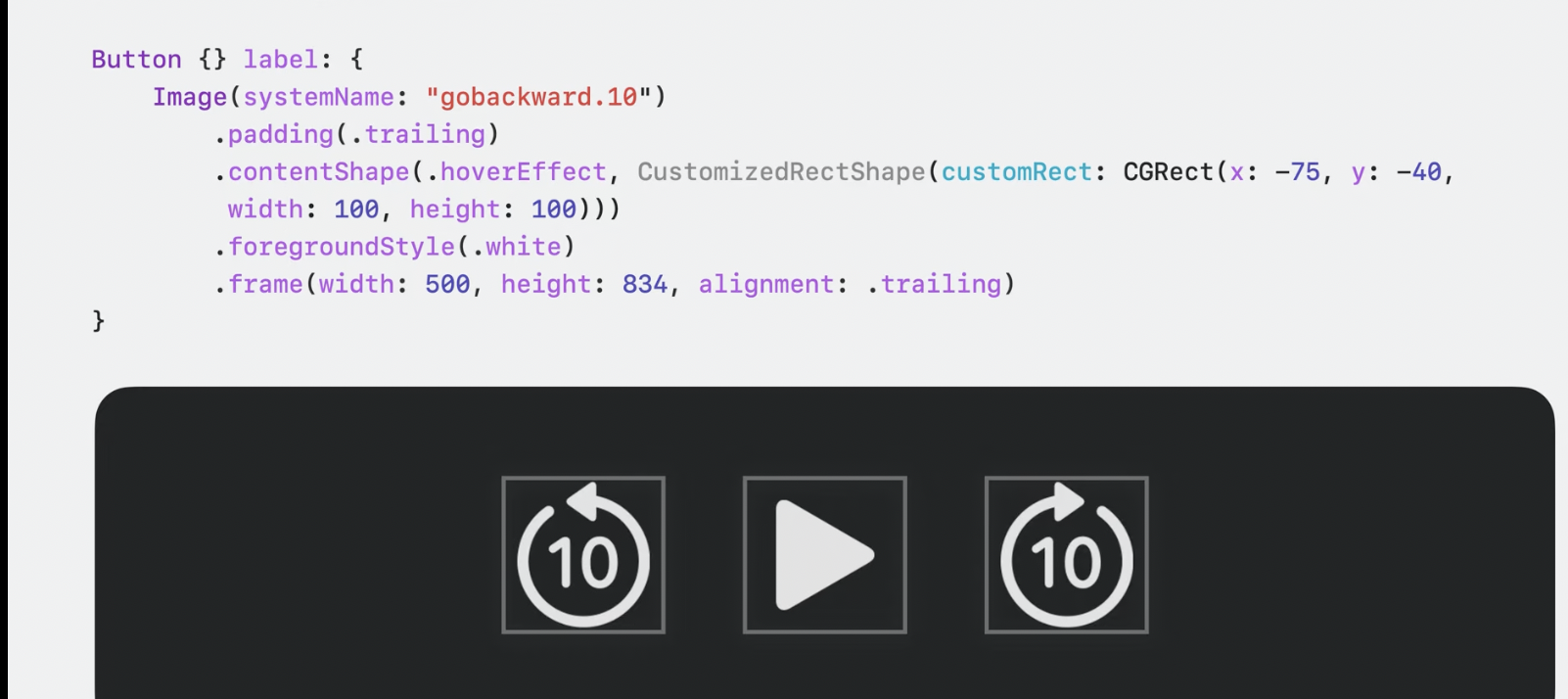

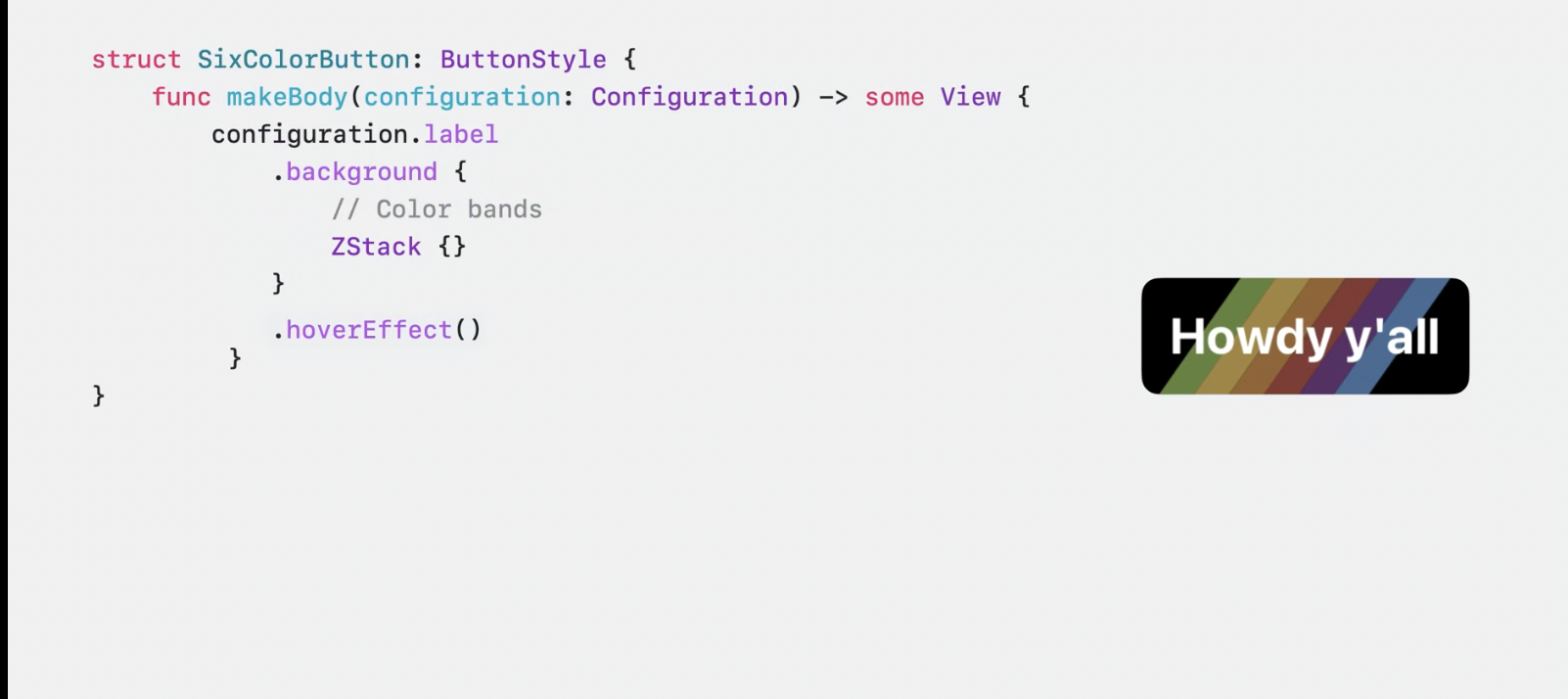

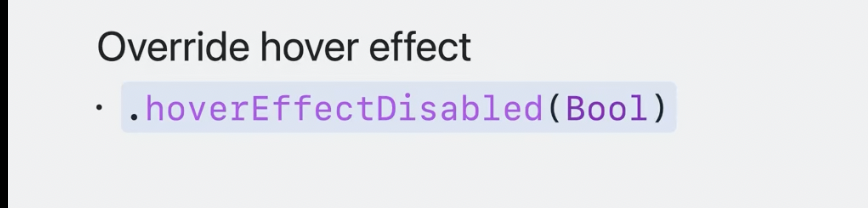

Hover Effect in visionOS

The system indicates which elements are interactive by applying the hover effect. The hover effect is a customizable feature that subtly highlights an object when a user looks at it, signaling that it is interactive.

Hover effects can be added to custom views, given custom styling, disabled, and configured to cover a specific area
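A minimal SwiftUI sketch of those options; the view and its contents are hypothetical, and `.highlight` is just one of the built-in effects.

```swift
import SwiftUI

struct HoverExamples: View {
    var body: some View {
        VStack(spacing: 24) {
            // Standard buttons get the system hover effect automatically.
            Button("Play") { }

            // A custom view opts in explicitly.
            Text("Custom card")
                .padding()
                .background(.thinMaterial, in: RoundedRectangle(cornerRadius: 16))
                // Limits the hover highlight to the card's rounded shape.
                .contentShape(.hoverEffect, RoundedRectangle(cornerRadius: 16))
                .hoverEffect(.highlight)

            // Hover feedback can be turned off where it would be misleading.
            Button("No hover") { }
                .hoverEffectDisabled()
        }
        .padding()
    }
}
```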

Alert View Distinctions in visionOS

An important distinction in visionOS is the way alert views are handled.

In visionOS, alert views don’t present modally, nor do they require handling in order to continue using the system

Biometric Security in visionOS

Anywhere an iPad or iPhone app uses Touch ID or Face ID, visionOS uses Optic ID instead
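Rather than hard-coding "Face ID", an app can ask LocalAuthentication which biometric is available. This sketch assumes an iOS 17 / visionOS 1 SDK or later (where `LABiometryType.opticID` exists); the fallback strings and the reason text are placeholders.

```swift
import LocalAuthentication

// Returns a user-facing name for the biometric the device supports.
func biometryName() -> String {
    let context = LAContext()
    var error: NSError?
    guard context.canEvaluatePolicy(.deviceOwnerAuthenticationWithBiometrics, error: &error) else {
        return "Passcode"
    }
    switch context.biometryType {
    case .faceID: return "Face ID"
    case .touchID: return "Touch ID"
    case .opticID: return "Optic ID"
    case .none: return "Passcode"
    @unknown default: return "Biometrics"
    }
}

// The same policy works on every platform; the system picks the sensor.
func authenticate() async -> Bool {
    let context = LAContext()
    do {
        return try await context.evaluatePolicy(
            .deviceOwnerAuthenticationWithBiometrics,
            localizedReason: "Unlock your notes"
        )
    } catch {
        return false
    }
}
```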

Look to Dictate Is Supported

Look to Dictate is a visionOS feature: when a user looks at the microphone in a search bar, dictation activates so they can speak what should go in the search bar. This functionality can be added to iPad and iPhone apps running in visionOS
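In SwiftUI, Look to Dictate can be enabled on a searchable view with the `searchDictationBehavior` modifier. The sketch assumes a hypothetical list of sample items and wraps the modifier in `#if os(visionOS)` so the same view still builds for iPhone and iPad.

```swift
import SwiftUI

struct PlanetSearchView: View {
    @State private var query = ""
    private let planets = ["Mercury", "Venus", "Earth", "Mars"]  // sample data

    private var results: [String] {
        query.isEmpty ? planets : planets.filter { $0.localizedCaseInsensitiveContains(query) }
    }

    var body: some View {
        NavigationStack {
            List(results, id: \.self) { Text($0) }
                .searchable(text: $query)
            #if os(visionOS)
                // Looking at the search field's microphone starts dictation.
                .searchDictationBehavior(.inline(activation: .onLook))
            #endif
        }
    }
}
```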

The capabilities your iPhone or iPad app supports determine which features need to be supported in visionOS

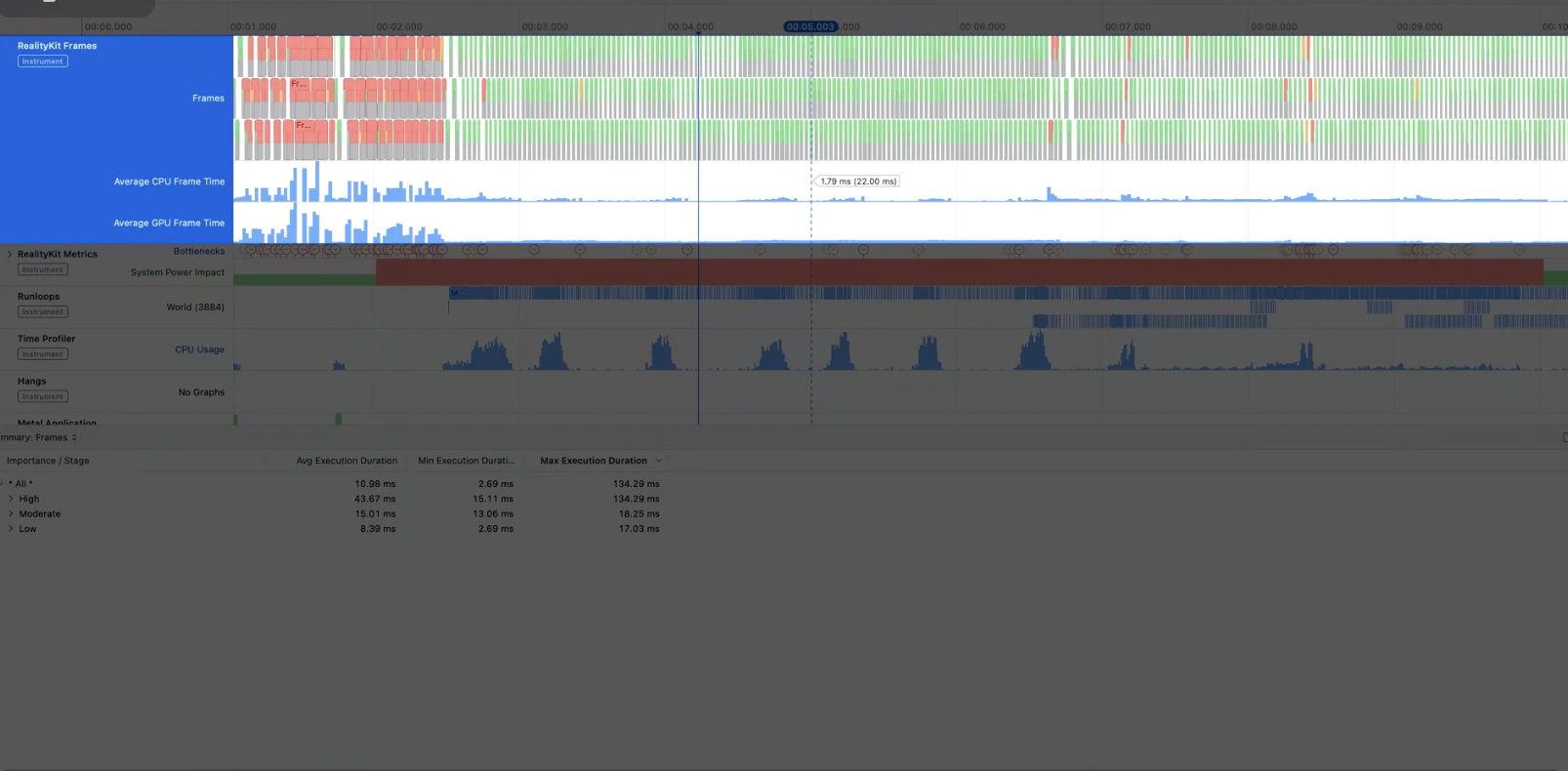

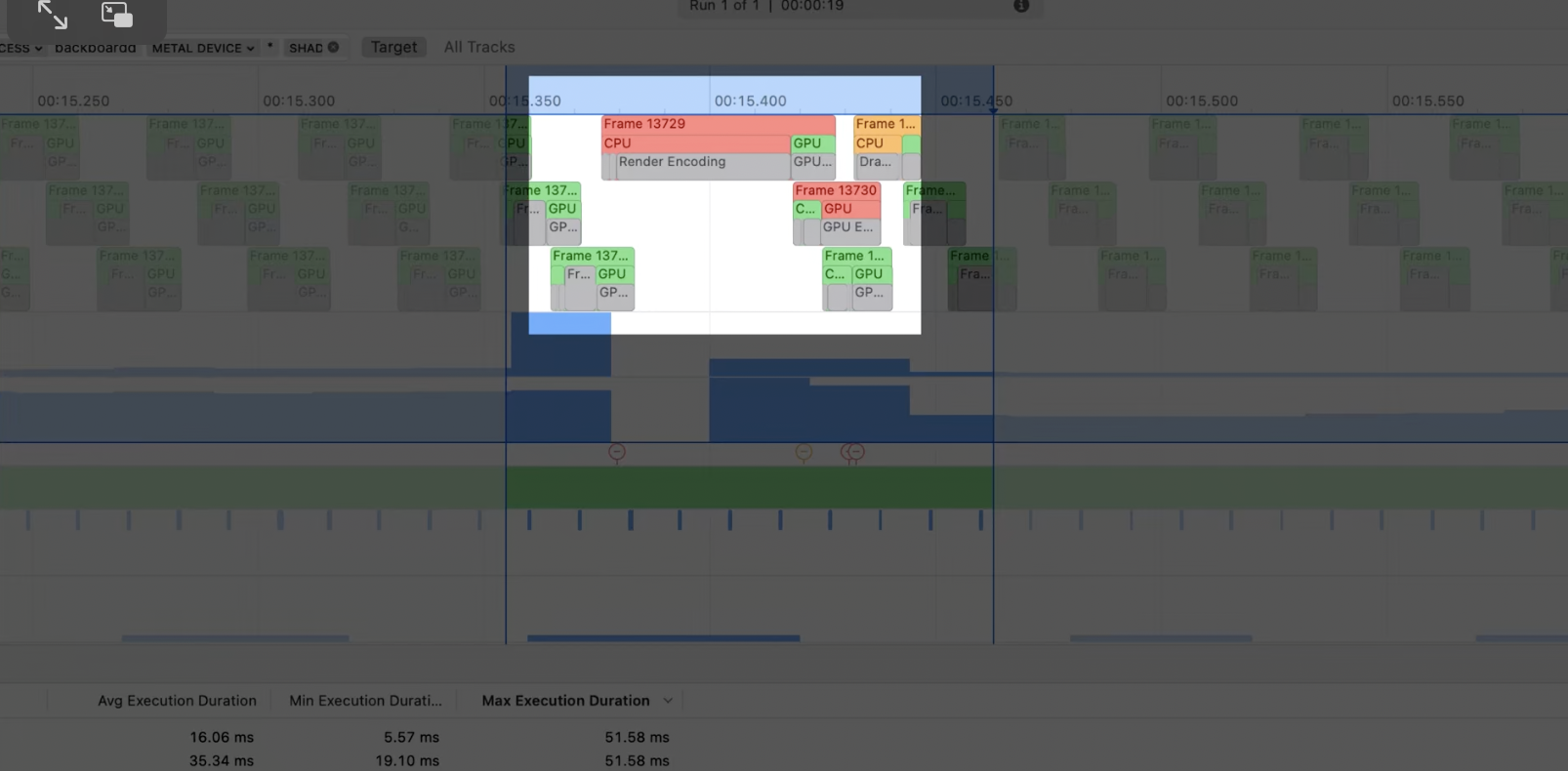

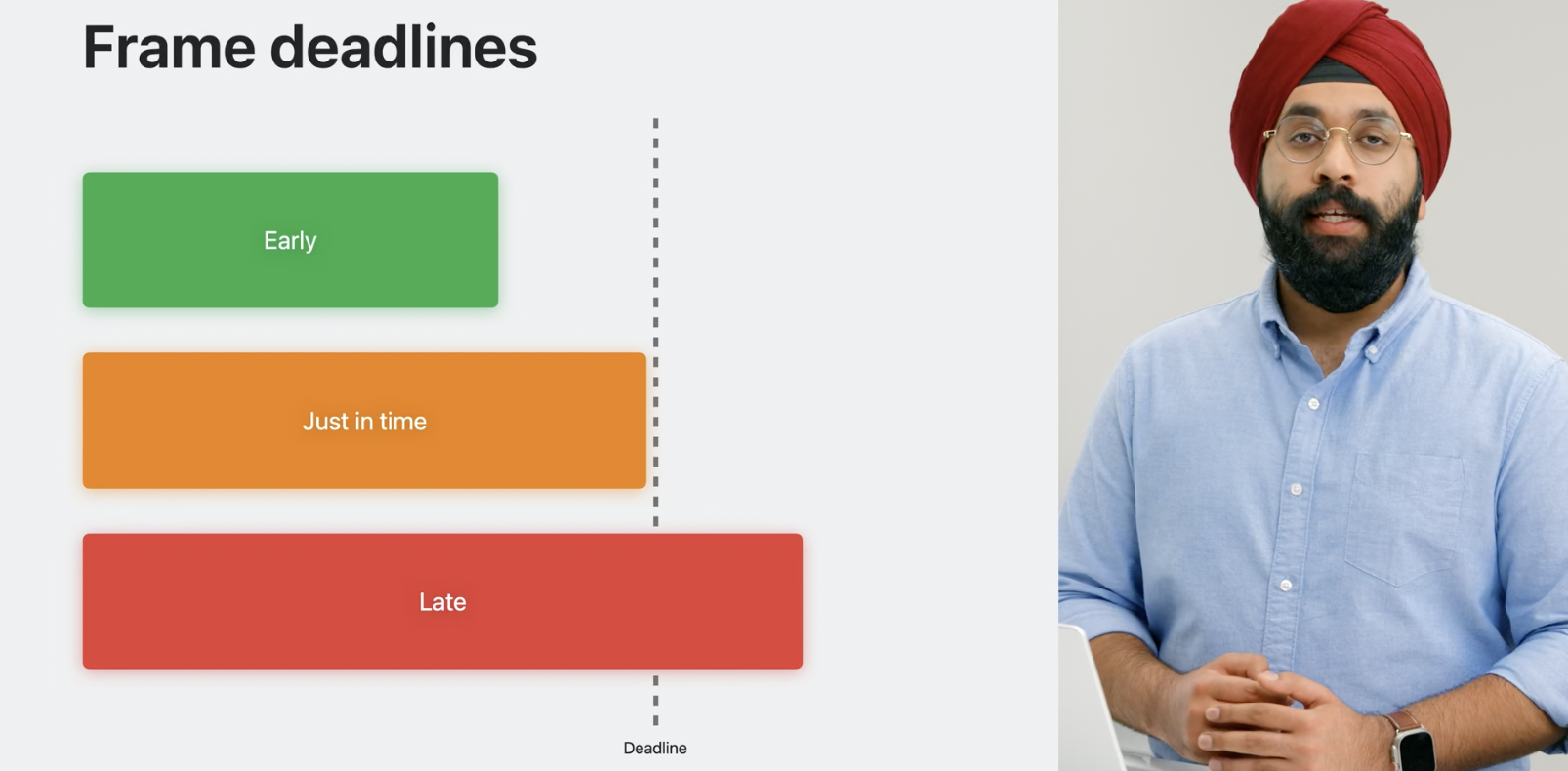

Analyzing RealityKit Objects with RealityKit Trace

Use Profile in Xcode to open Instruments, a suite of tools for testing and performance analysis. RealityKit Trace is a new Instruments template for analyzing the performance of RealityKit content

RealityKit Trace gives details on the frames rendered for RealityKit content. A visual breakdown of the number of frames, the cost of each frame, CPU/GPU usage, and the status of each frame helps when troubleshooting slow rendering. Frames are classified as early, just in time, or late based on rendering speed, and slow frames appear in red. Use the chart to evaluate the frame rate; Apple advises targeting 90 frames per second.
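The 90 fps target translates directly into a per-frame time budget: 1000 ms / 90 ≈ 11.1 ms. Here is a tiny, framework-free Swift sketch of that arithmetic; the function names are my own and are not part of RealityKit Trace or Instruments.

```swift
/// Time available to produce one frame at a given frame rate, in milliseconds.
func frameBudgetMilliseconds(framesPerSecond: Double) -> Double {
    precondition(framesPerSecond > 0, "frame rate must be positive")
    return 1_000.0 / framesPerSecond
}

/// True when a measured frame took longer than the budget and misses the deadline.
func missesDeadline(frameTimeMs: Double, framesPerSecond: Double = 90) -> Bool {
    frameTimeMs > frameBudgetMilliseconds(framesPerSecond: framesPerSecond)
}

let budget = frameBudgetMilliseconds(framesPerSecond: 90)
print("Budget at 90 fps: \(budget) ms")                         // roughly 11.1 ms
print("12 ms frame late? \(missesDeadline(frameTimeMs: 12))")   // true
```

This also puts the 8 ms input-update guideline below in context: it leaves a few milliseconds of each frame for everything else.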

RealityKit Trace offers a bottleneck view that highlights the time ranges where processing is slowest. Use it to find areas to optimize for faster rendering

The Core Animation render trace is an important area to monitor for expensive transactions, as Core Animation is described as one of the biggest causes of slow rendering. The Core Animation trace examines three components: offscreen prepares, render passes, and UI meshes. Shadows, rounded rectangles, and visual effects all contribute to offscreen prepares

Optimization Strategies in visionOS

Below is a list of strategies for optimizing visionOS apps

When positioning your windows, avoid overlapping translucent windows. This prevents overdraw, which is expensive for the system to render

Avoid unnecessary view updates and redraws. Views created with SwiftUI observe published properties to trigger updates, so write your code so that published properties only update views when necessary

Reduce offscreen render passes by using shadows, rounded rectangles, and visual effects with consideration for their cost to the system

Use physically based materials to optimize for lighting

When creating RealityKit objects, keep them simple

When displaying entities, create them in advance and hide/show them instead of constantly recreating them in the view

When making network calls, use asynchronous loading APIs

To optimize input performance, use static colliders and keep input-handling updates under 8 ms

Optimize ARKit usage by choosing tracking modes that reduce anchor cost and by minimizing persistent anchors

Optimize video display by using 24 to 30 Hz videos, and avoid concurrent video playback

Optimize SharePlay by turning off any features that aren’t necessary during that experience

Optimize and test your app for adverse thermal conditions using thermal inducing

Optimize memory by reducing UI memory allocations and reducing texture and geometry memory
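As an illustration of the "create in advance, then hide/show" and asynchronous loading tips, here is a sketch of a hypothetical entity pool. It assumes an asset named "Marker" in the template's RealityKitContent package; hiding is done with `isEnabled`, which takes an entity out of rendering without destroying it.

```swift
import RealityKit
import RealityKitContent

// Hypothetical pool that builds entities once and toggles their visibility.
@MainActor
final class MarkerPool {
    private(set) var markers: [Entity] = []

    // Loads the template asynchronously so the main thread is not blocked.
    // "Marker" is a hypothetical asset name.
    func prepare(count: Int, parent: Entity) async throws {
        let template = try await Entity(named: "Marker", in: realityKitContentBundle)
        for _ in 0..<count {
            let marker = template.clone(recursive: true)
            marker.isEnabled = false   // hidden until needed
            parent.addChild(marker)
            markers.append(marker)
        }
    }

    // Reuses the first hidden marker instead of creating a new entity.
    func show(at position: SIMD3<Float>) {
        guard let marker = markers.first(where: { !$0.isEnabled }) else { return }
        marker.position = position
        marker.isEnabled = true
    }

    func hideAll() {
        markers.forEach { $0.isEnabled = false }
    }
}
```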

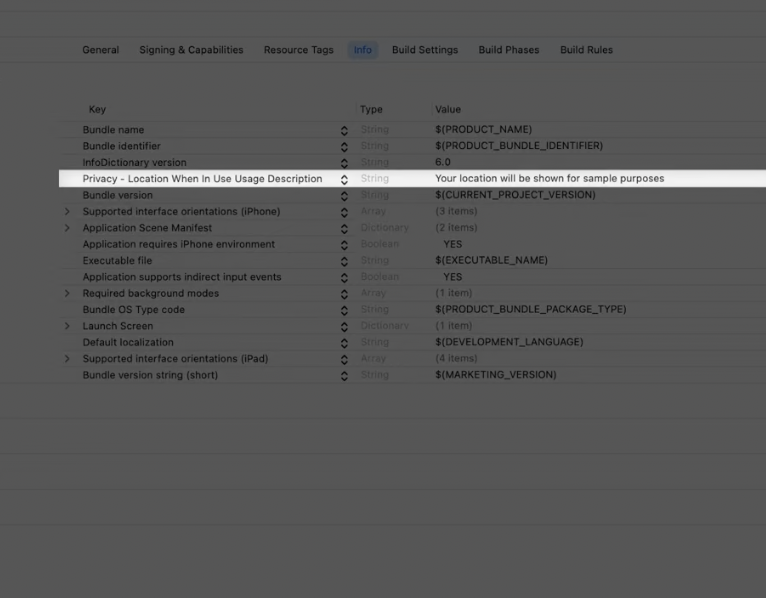

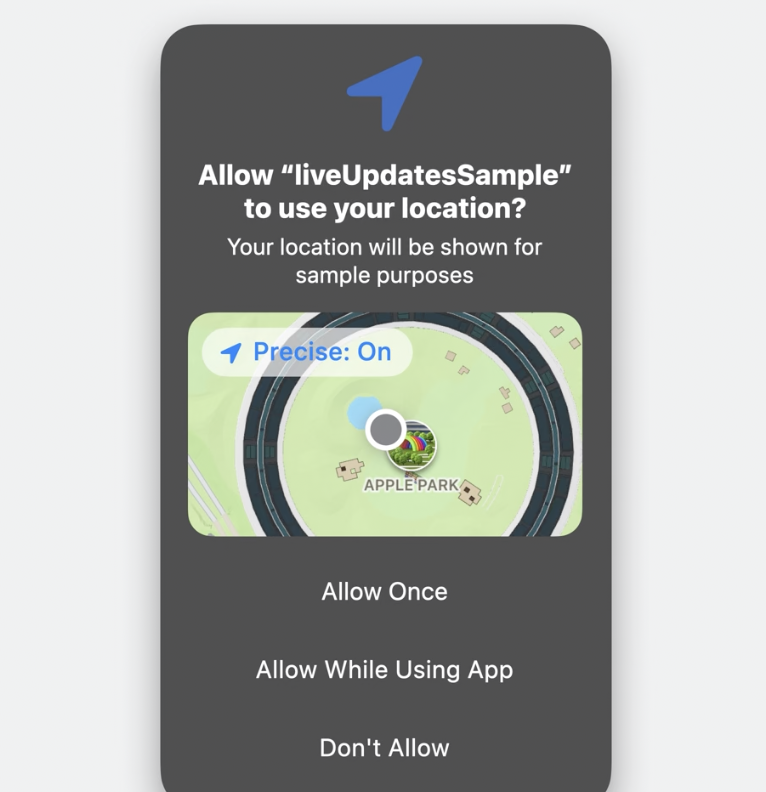

Using Core Location in spatial computing

Use Core Location to have your device deliver location updates

Use the code as a reference for having a device deliver location updates

Location services must be approved by the user; the approval prompt requires a privacy usage description key (for example, NSLocationWhenInUseUsageDescription) in the Info.plist

In the Shared Space, apps must be looked at by the user before they receive location updates
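A minimal sketch using `CLLocationUpdate.liveUpdates()`, the async location API introduced alongside visionOS. It assumes the Info.plist already contains `NSLocationWhenInUseUsageDescription`; the `LocationReader` class name is hypothetical.

```swift
import CoreLocation

@MainActor
final class LocationReader {
    // Kept as a property so the authorization request stays alive.
    private let manager = CLLocationManager()

    func start() async {
        // Shows the system permission prompt the first time.
        manager.requestWhenInUseAuthorization()

        do {
            for try await update in CLLocationUpdate.liveUpdates() {
                guard let location = update.location else { continue }
                print("Lat \(location.coordinate.latitude), Lon \(location.coordinate.longitude)")
            }
        } catch {
            print("Location updates stopped: \(error)")
        }
    }
}
```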

Using ARKit to track and perceive the visual world

Apple's ARKit gives developers a toolkit that uses computer vision to help interpret the visual world. These tools are essential for positioning content, understanding the scene, and tracking the user's body, such as their hands

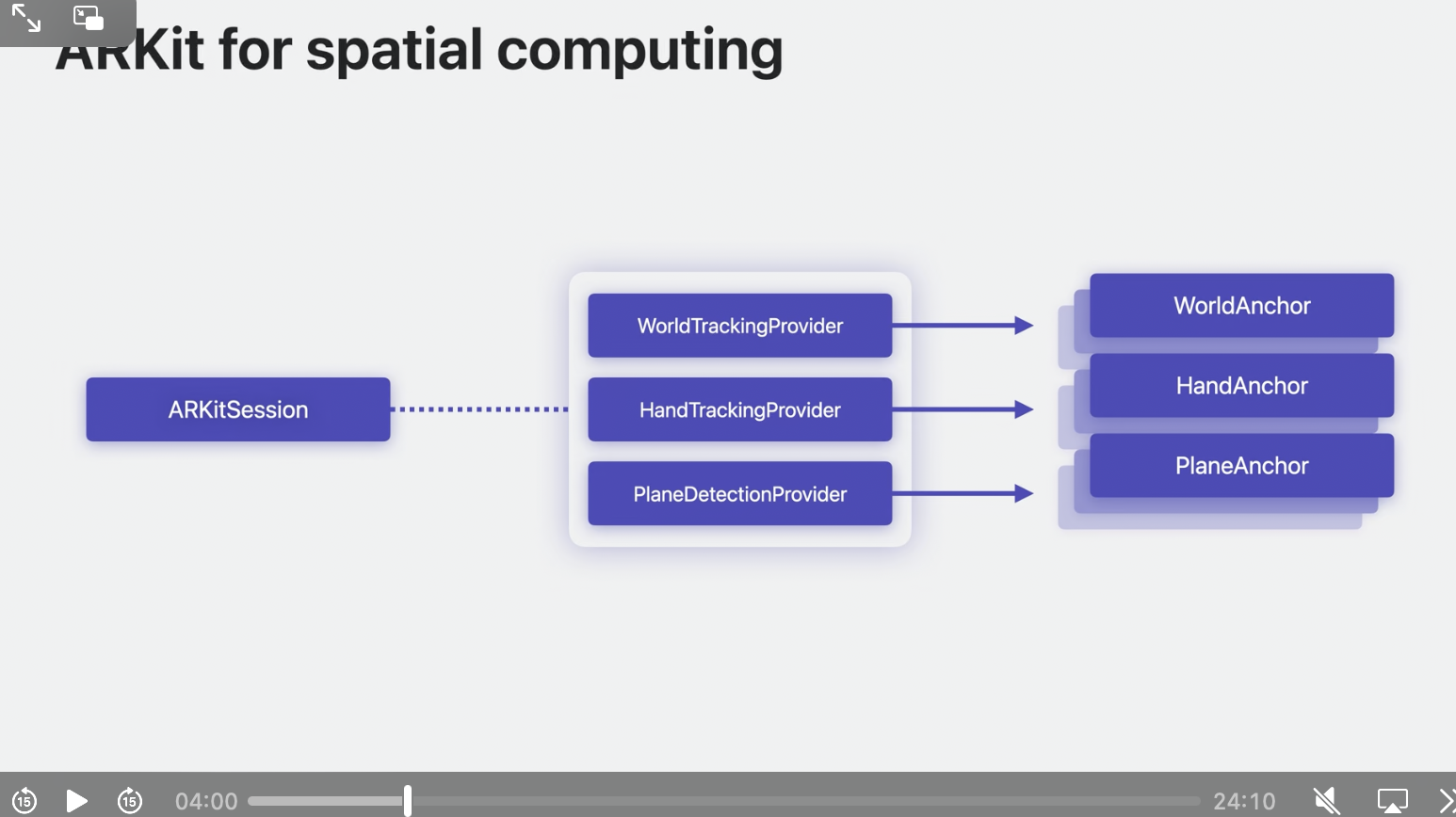

ARKit has been redesigned for spatial computing to make its features easier to access. ARKit in spatial computing can be broken down into three components.

Data Providers - Individual, à la carte ARKit services

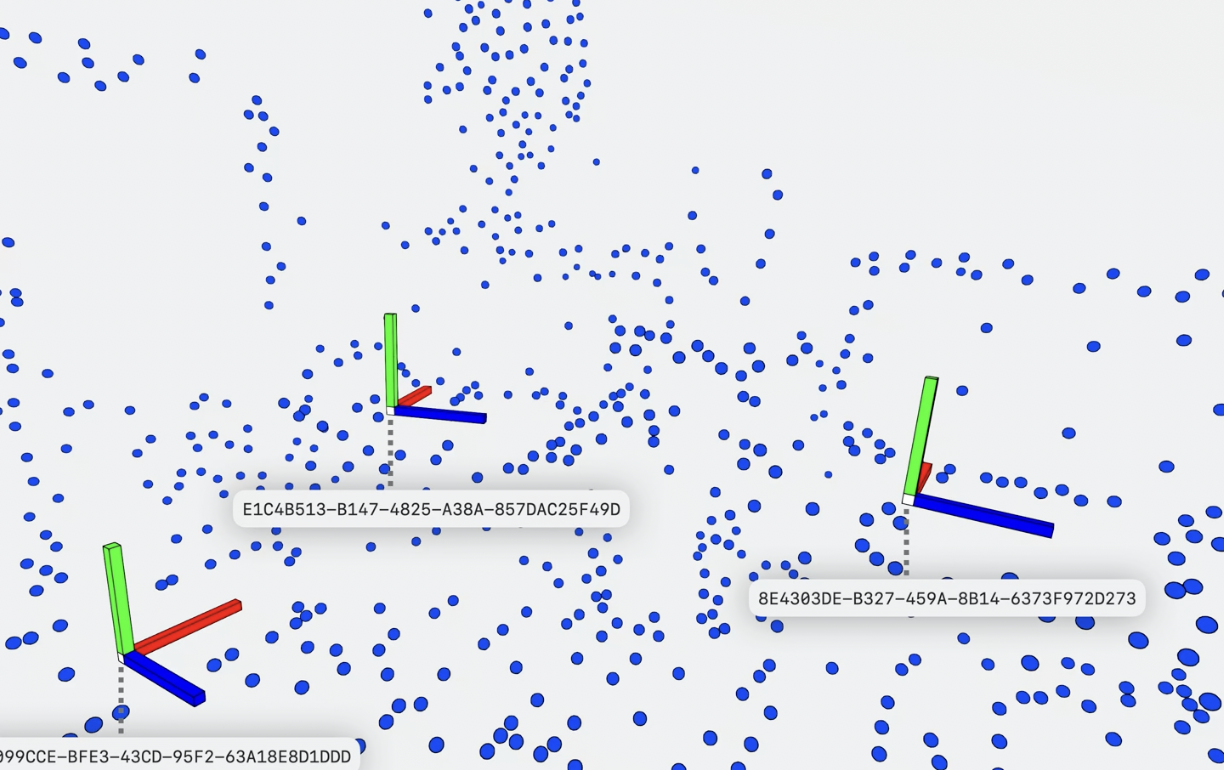

Anchors - Positions in the real world, reported by data providers and used to place virtual objects.

ARKit Session - Manages a defined group of data providers
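Here is a minimal sketch of how the three pieces fit together: one ARKitSession running two data providers. The class name is hypothetical. On visionOS, ARKit data is only delivered while the app has an open immersive space, and plane detection requires an `NSWorldSensingUsageDescription` entry in Info.plist.

```swift
import ARKit

// Hypothetical model object owning one session and two data providers.
@MainActor
final class WorldSensingModel {
    let session = ARKitSession()
    let worldTracking = WorldTrackingProvider()
    let planeDetection = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    func start() async {
        // Some providers are unavailable in certain environments (e.g. the simulator).
        guard WorldTrackingProvider.isSupported, PlaneDetectionProvider.isSupported else {
            print("Required ARKit providers are not supported here")
            return
        }
        do {
            // The session manages the group of providers and their authorization.
            try await session.run([worldTracking, planeDetection])
        } catch {
            print("Failed to start ARKit session: \(error)")
        }
    }
}
```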

Below are some of the features ARKit provides to developers

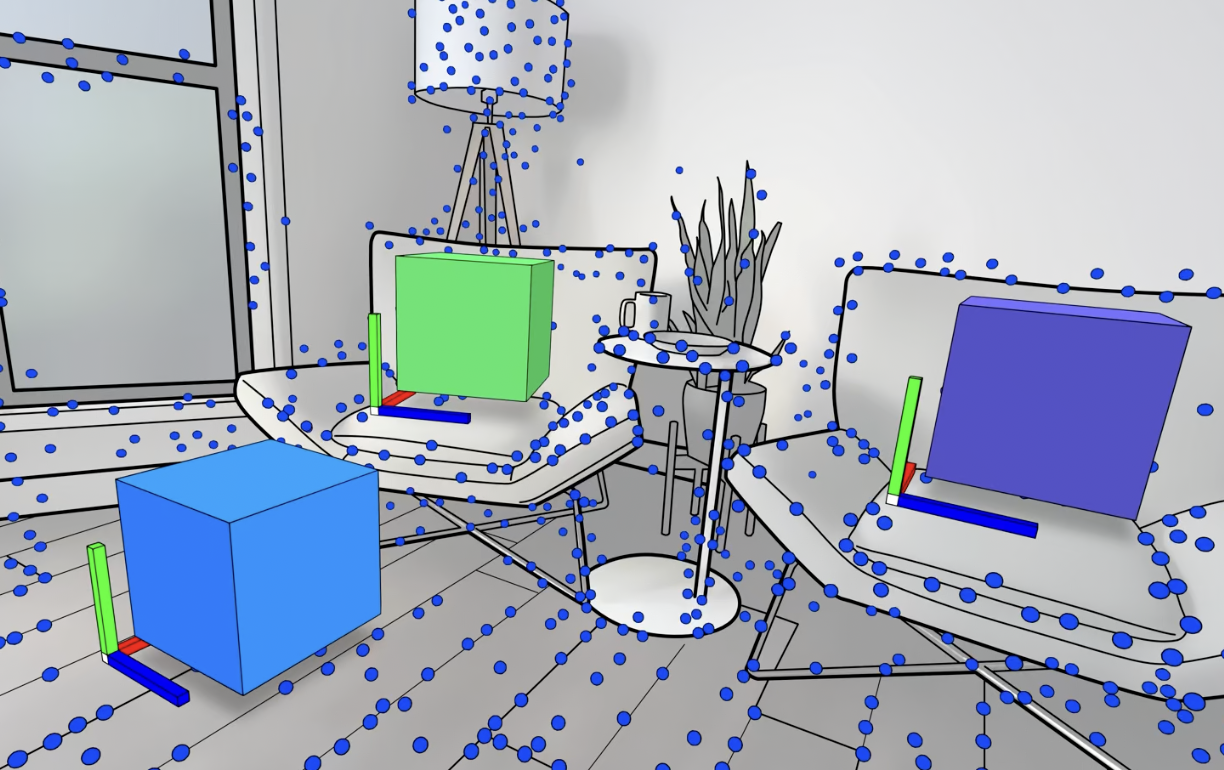

World Tracking adds world anchors for virtual content placement

This is important for keeping objects fixed to the real world, even across sessions. For example, if I placed a 3D virtual book on my desk, the virtual book would still be on my desk when I used visionOS the next day.
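A sketch of placing and re-observing a world anchor, assuming a `WorldTrackingProvider` that is already running in an ARKitSession. The position (0.8 m up, 1 m forward from the origin) is an arbitrary example standing in for the desk.

```swift
import ARKit
import simd

// Adds a world anchor at a fixed position so content can stay there.
func placeBookAnchor(using worldTracking: WorldTrackingProvider) async throws -> WorldAnchor {
    var transform = matrix_identity_float4x4
    transform.columns.3 = [0, 0.8, -1, 1]   // x, y, z in meters, plus w
    let anchor = WorldAnchor(originFromAnchorTransform: transform)
    try await worldTracking.addAnchor(anchor)
    return anchor
}

// Anchors added earlier are reported again through anchorUpdates.
func observeAnchors(from worldTracking: WorldTrackingProvider) async {
    for await update in worldTracking.anchorUpdates {
        switch update.event {
        case .added, .updated:
            print("Anchor \(update.anchor.id) at \(update.anchor.originFromAnchorTransform.columns.3)")
        case .removed:
            print("Anchor \(update.anchor.id) removed")
        }
    }
}
```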

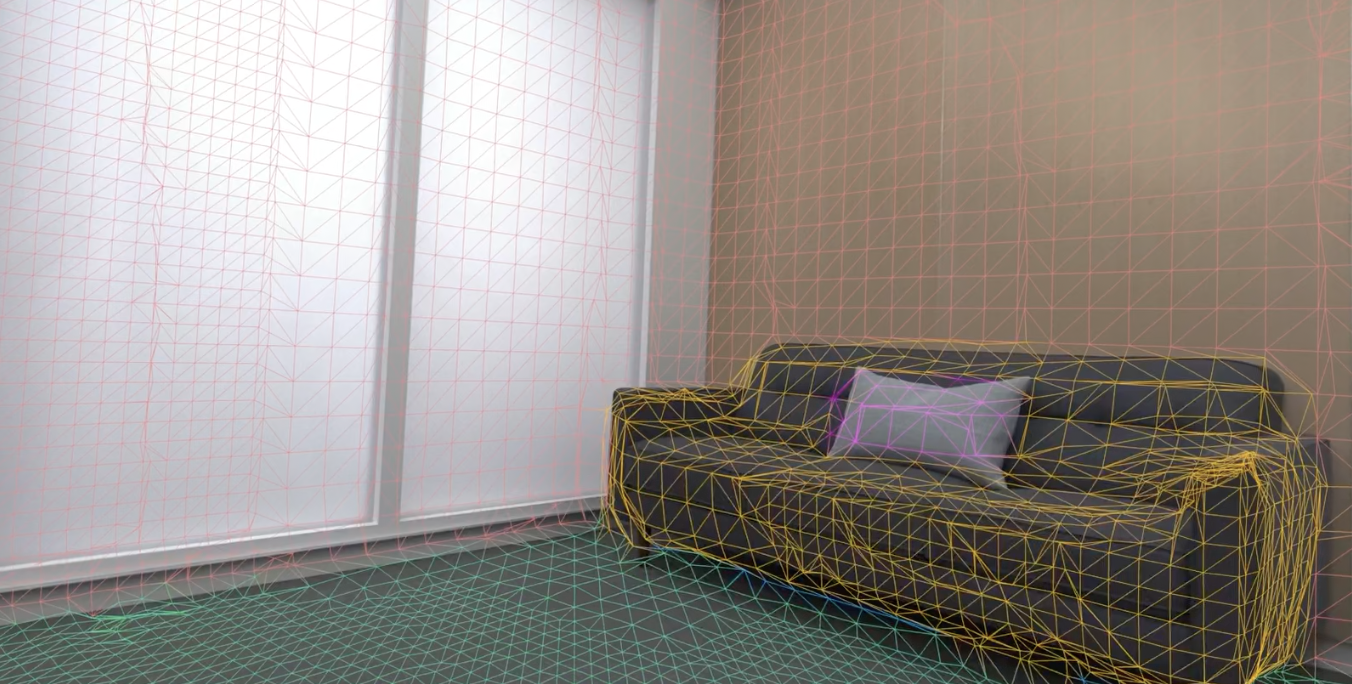

Scene understanding

Scene understanding is important for integrating content with the surrounding environment.

This can be broken down into three categories

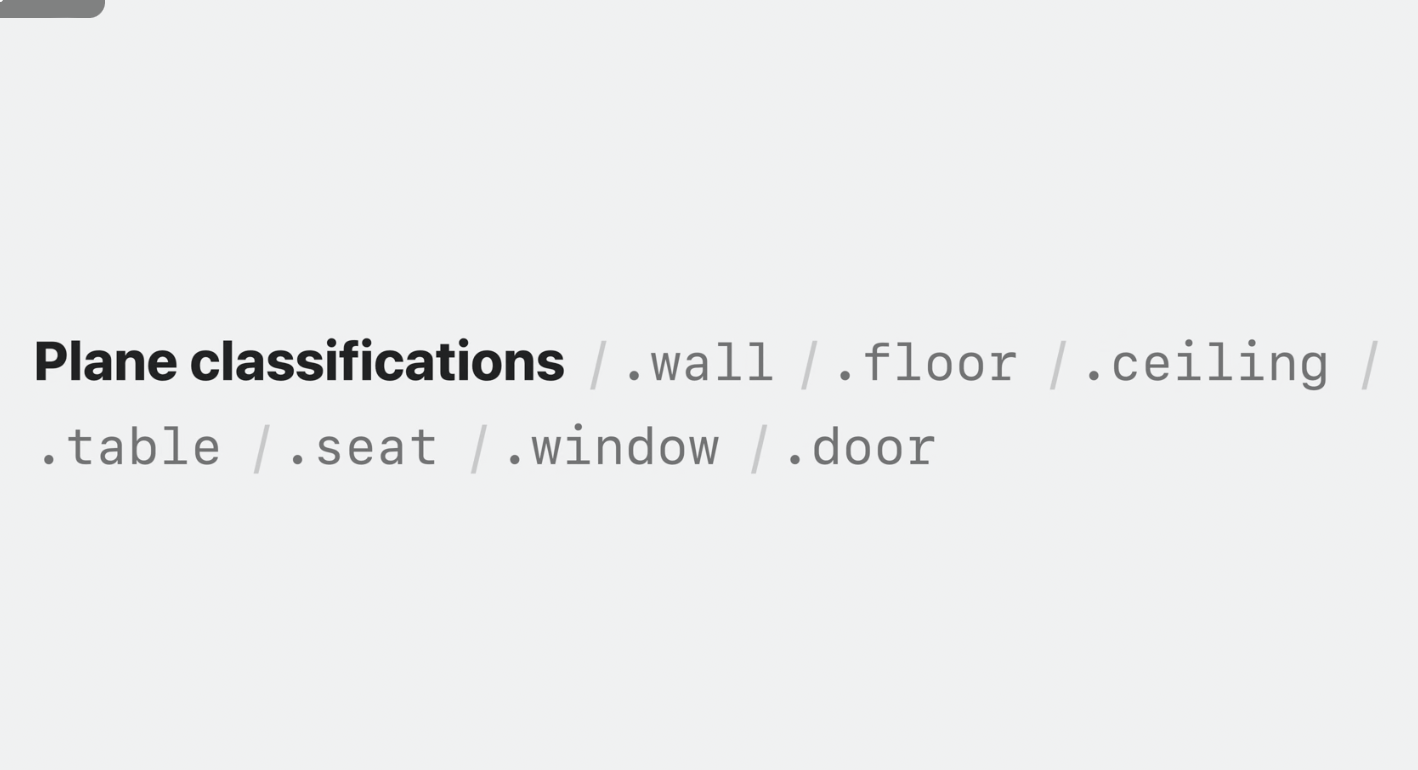

Plane Detection - detects walls, tables, floors, and other flat surfaces

Scene Geometry - determines the physical shape and dimensions of the surroundings

Image Tracking - detects predefined images
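A short sketch that reads plane anchors from a PlaneDetectionProvider, assuming the provider is already running in an ARKitSession, and logs each plane's classification (wall, floor, table, and so on) and alignment.

```swift
import ARKit

// Logs detected planes as they are added, updated, or removed.
func observePlanes(from planeDetection: PlaneDetectionProvider) async {
    for await update in planeDetection.anchorUpdates {
        let plane = update.anchor
        switch update.event {
        case .added, .updated:
            print("Plane \(plane.id): \(plane.classification), alignment \(plane.alignment)")
        case .removed:
            print("Plane \(plane.id) removed")
        }
    }
}
```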

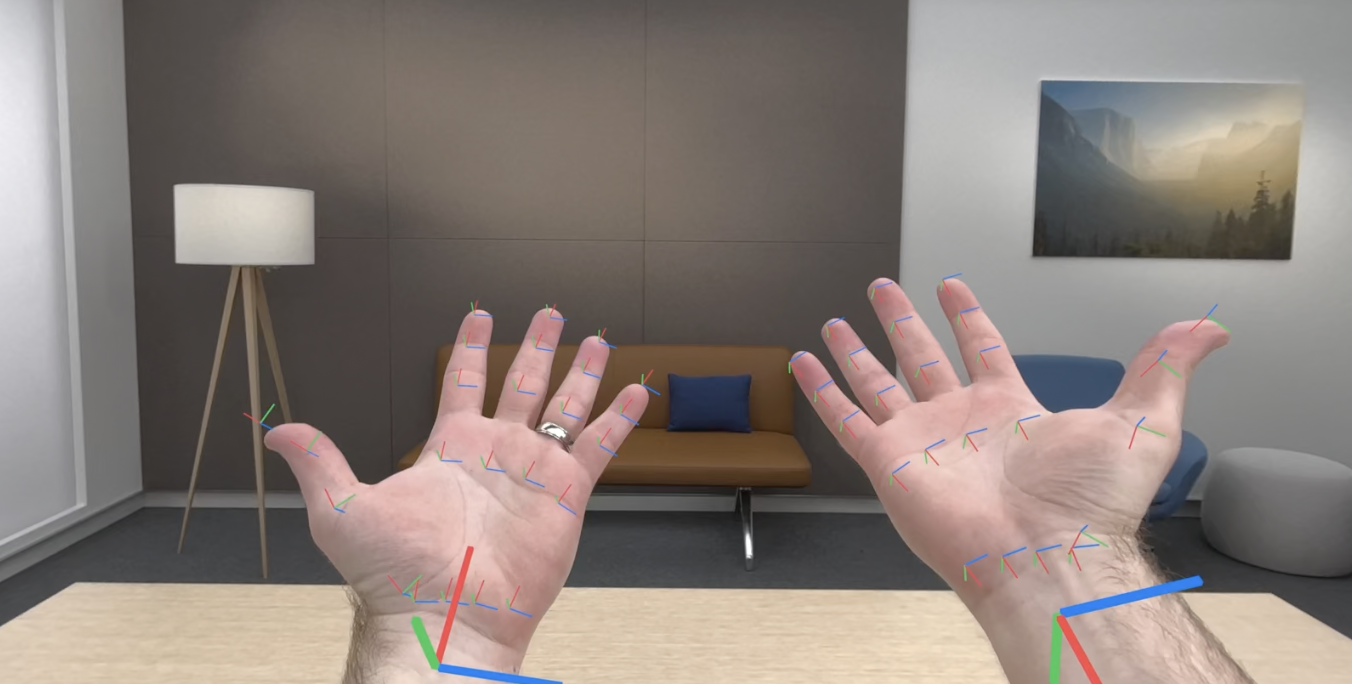

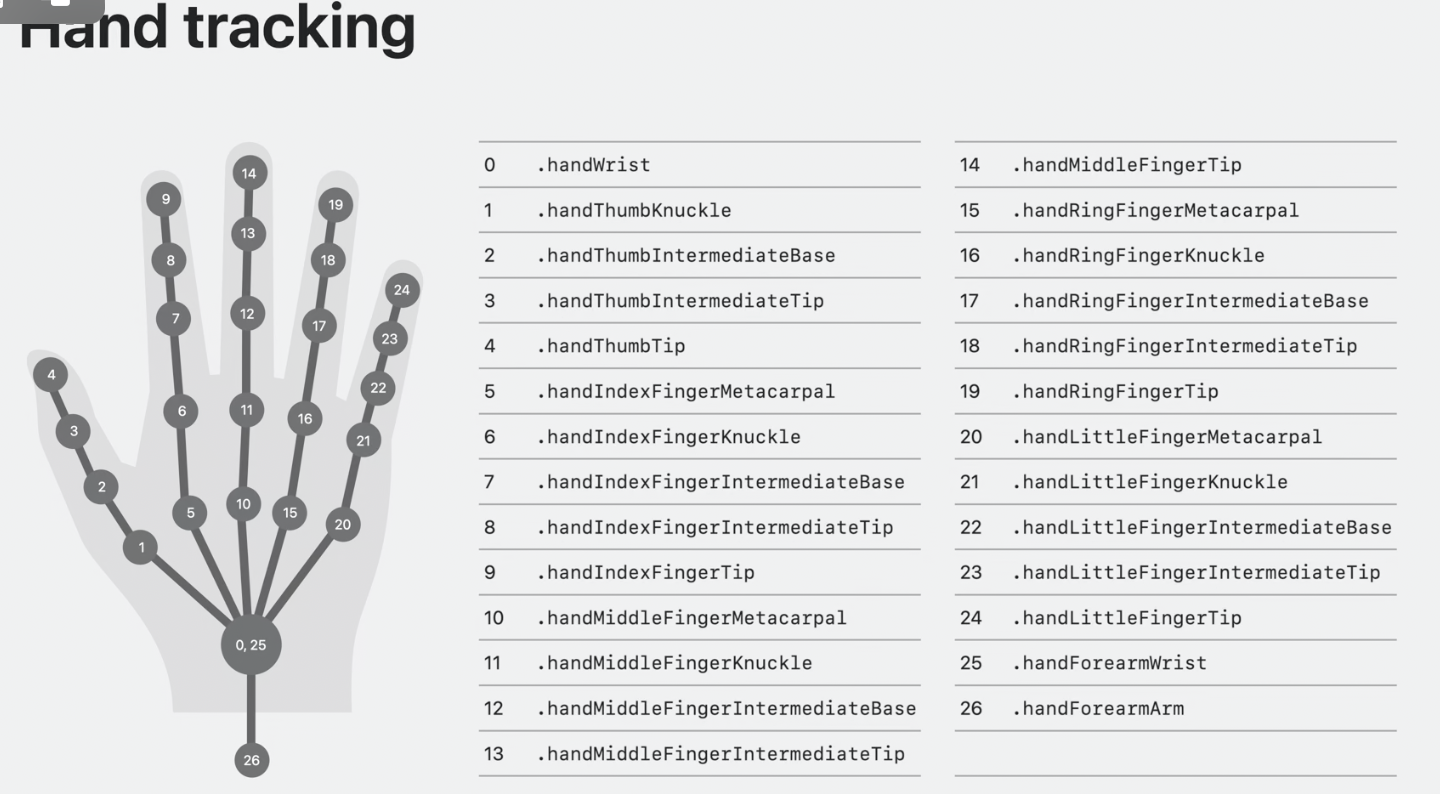

Hand Tracking is used to detect hands

Useful for content placement and detecting custom gestures

Below is an example app using ARKit hand tracking.

This sample project allows you to create 3D cubes by snapping your fingers

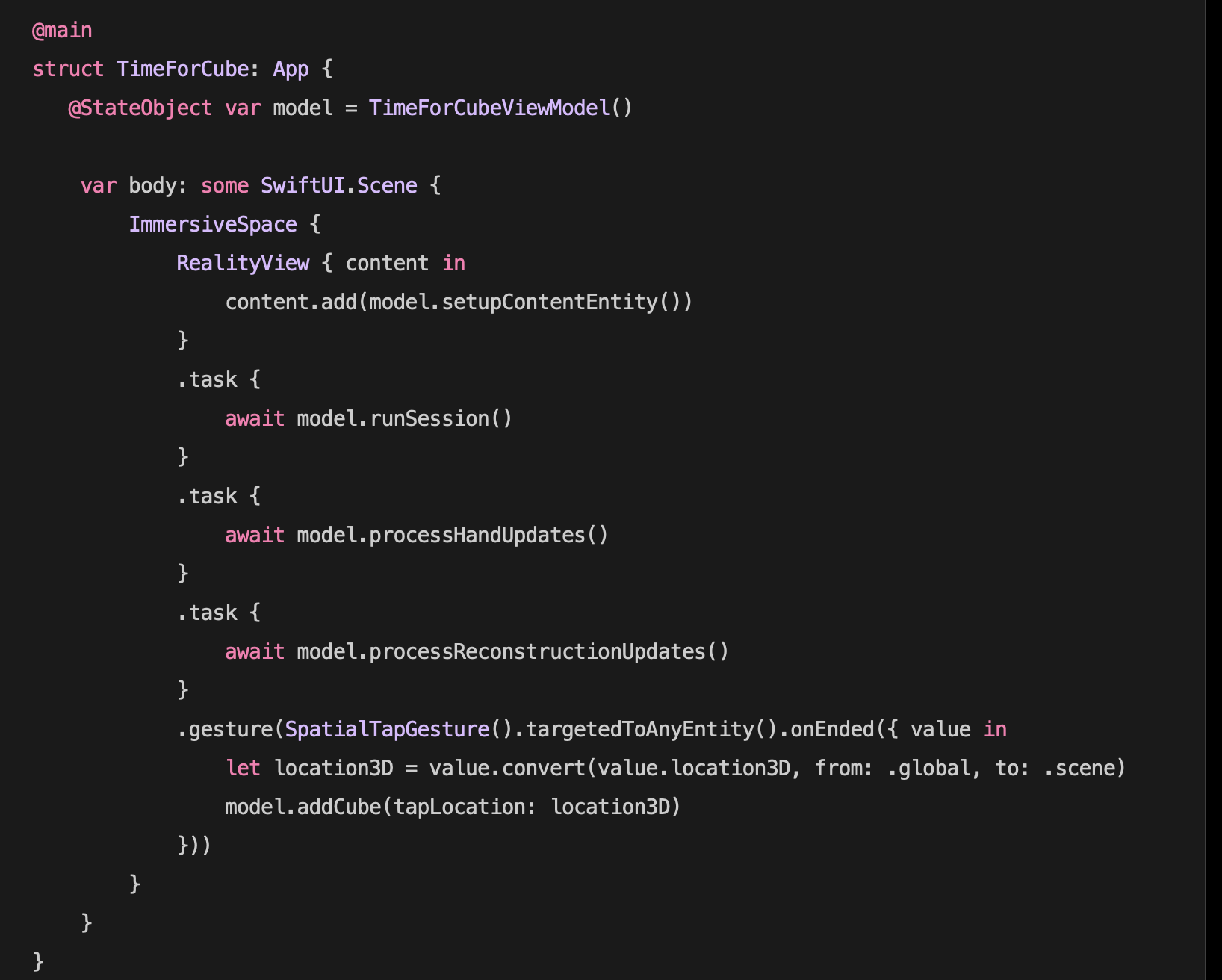

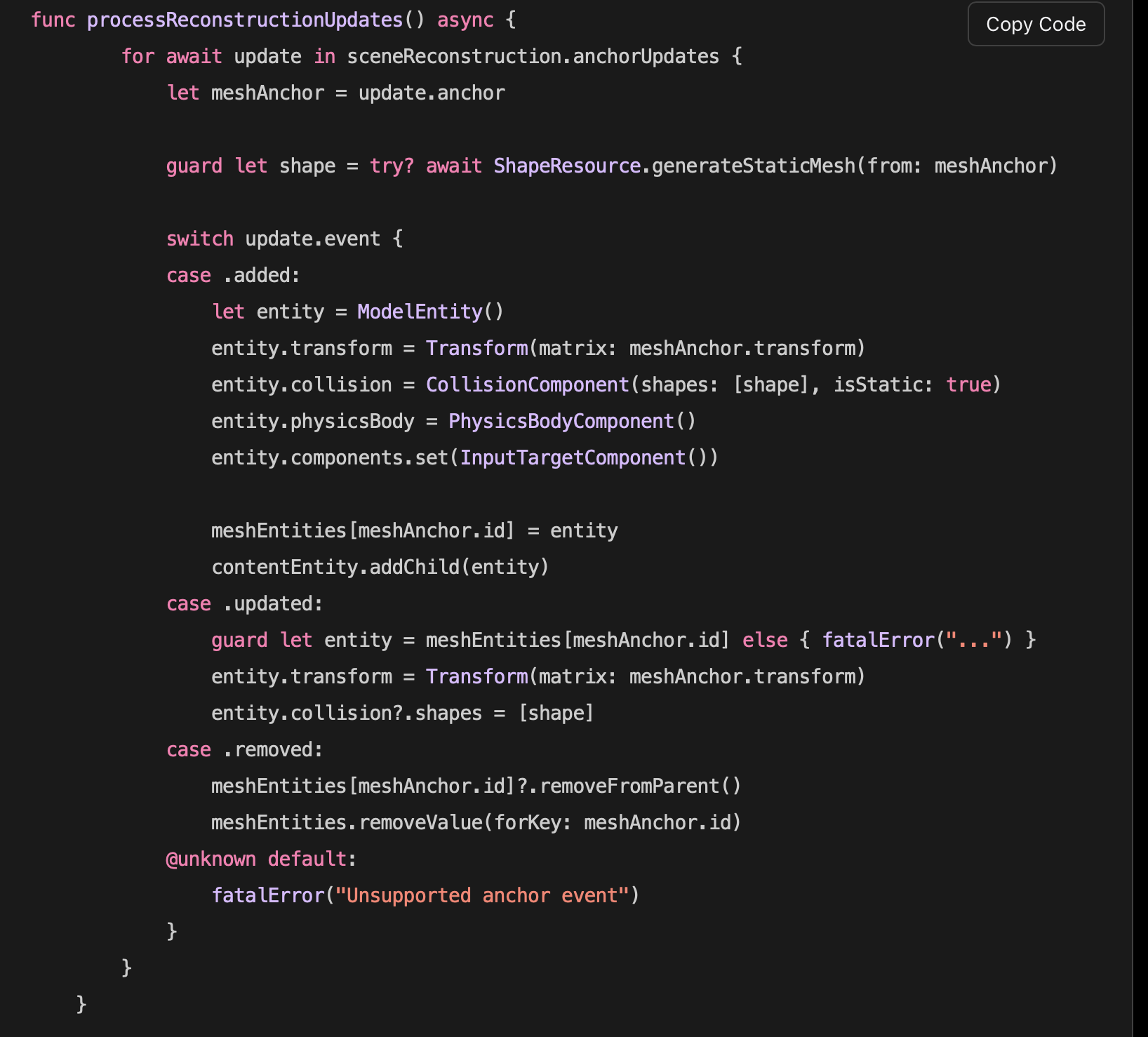

2nd Slide: The View. The view adds any objects generated in the RealityView to the visible environment. It also adds a tap gesture to any 3D objects that triggers the creation of a cube when tapped. Lastly, the view kicks off initialization of the ARKit services for scene understanding and hand tracking.

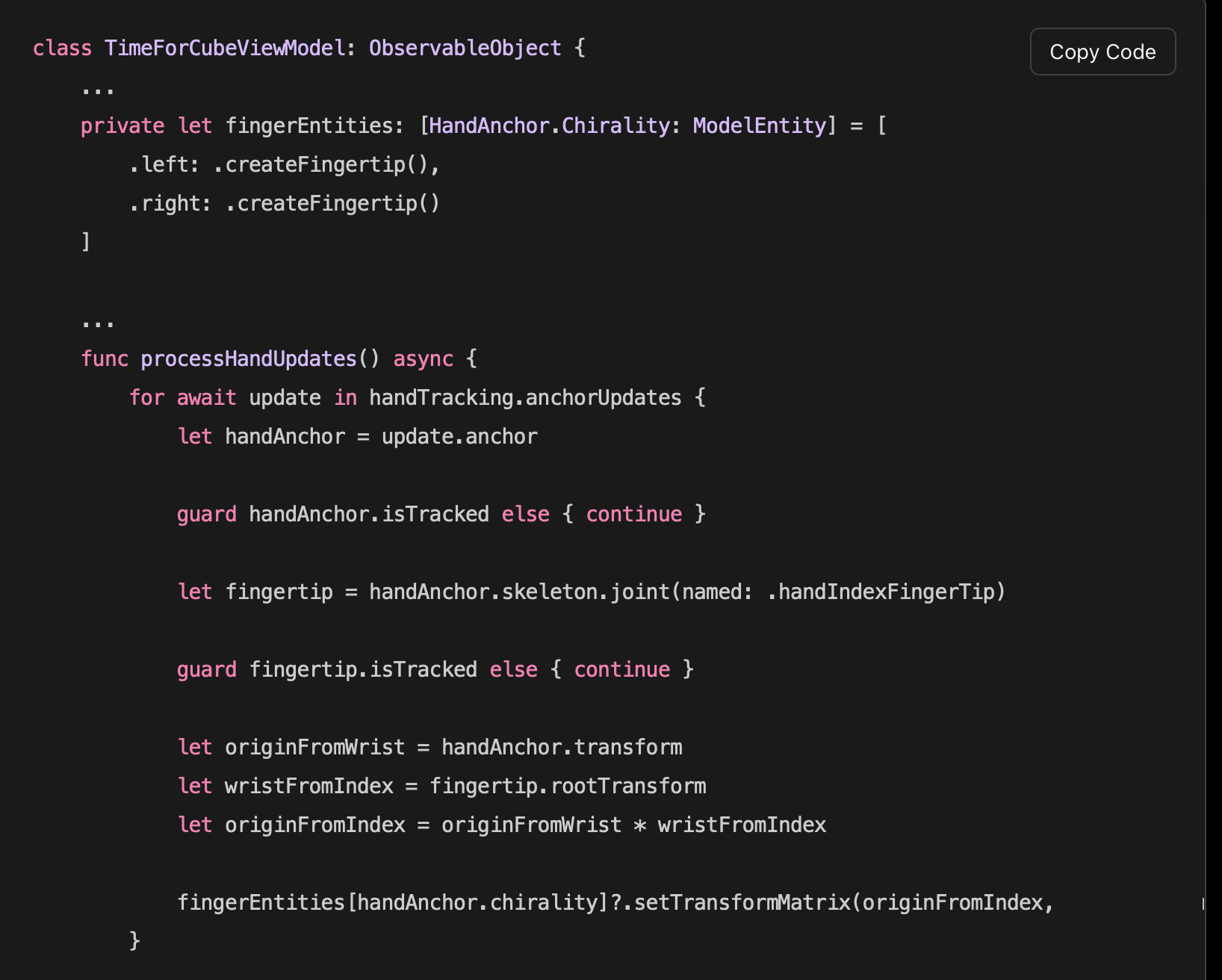

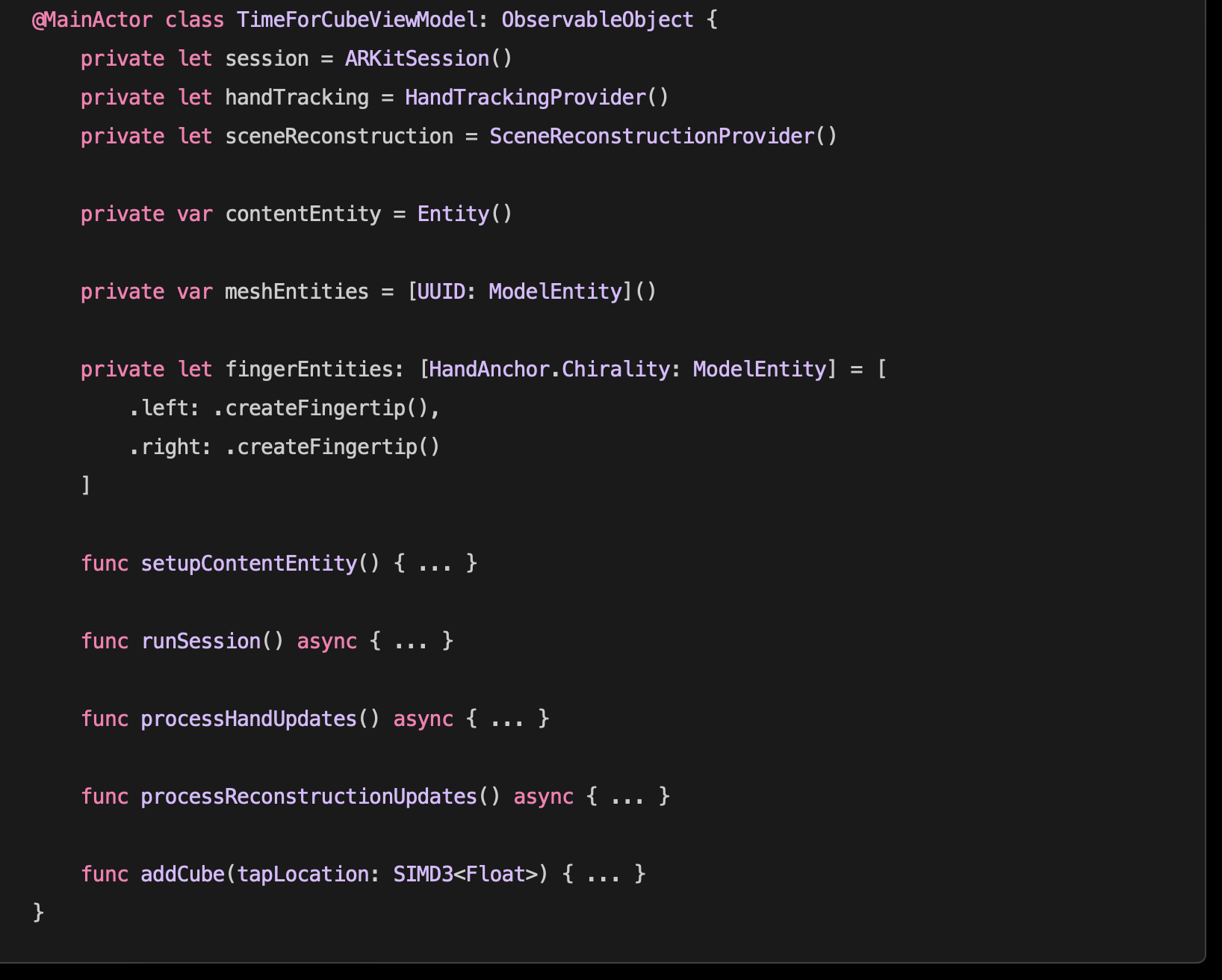

3rd Slide: The View Model

The view model contains properties representing the ARKit session and its hand tracking and scene understanding providers. It also contains functions that are called on hand tracking and scene tracking updates, and for 3D cube creation.
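Here is a simplified, hypothetical sketch of such a view model. It is not the sample's actual code: it tracks the right index fingertip with HandTrackingProvider and exposes a function that places a 10 cm cube there. The `rootEntity` would be added to the RealityView's content, and `NSHandsTrackingUsageDescription` must be present in Info.plist.

```swift
import ARKit
import RealityKit

@MainActor
final class HandTrackingViewModel {
    let session = ARKitSession()
    let handTracking = HandTrackingProvider()
    // Parent for generated cubes; add this to the RealityView's content.
    let rootEntity = Entity()

    // Last known world-space position of the right index fingertip.
    private(set) var rightIndexTip: SIMD3<Float>?

    func runSession() async {
        guard HandTrackingProvider.isSupported else { return }
        do {
            try await session.run([handTracking])
        } catch {
            print("ARKit session failed: \(error)")
        }
    }

    func processHandUpdates() async {
        for await update in handTracking.anchorUpdates {
            let hand = update.anchor
            guard hand.isTracked, hand.chirality == .right,
                  let joint = hand.handSkeleton?.joint(.indexFingerTip), joint.isTracked
            else { continue }
            // Joint transforms are relative to the hand anchor, so combine both.
            let world = hand.originFromAnchorTransform * joint.anchorFromJointTransform
            rightIndexTip = SIMD3(world.columns.3.x, world.columns.3.y, world.columns.3.z)
        }
    }

    // Adds a 10 cm cube at the fingertip, if one is being tracked.
    func addCubeAtFingertip() {
        guard let position = rightIndexTip else { return }
        let cube = ModelEntity(
            mesh: .generateBox(size: 0.1),
            materials: [SimpleMaterial(color: .systemPink, isMetallic: false)]
        )
        cube.position = position
        rootEntity.addChild(cube)
    }
}
```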

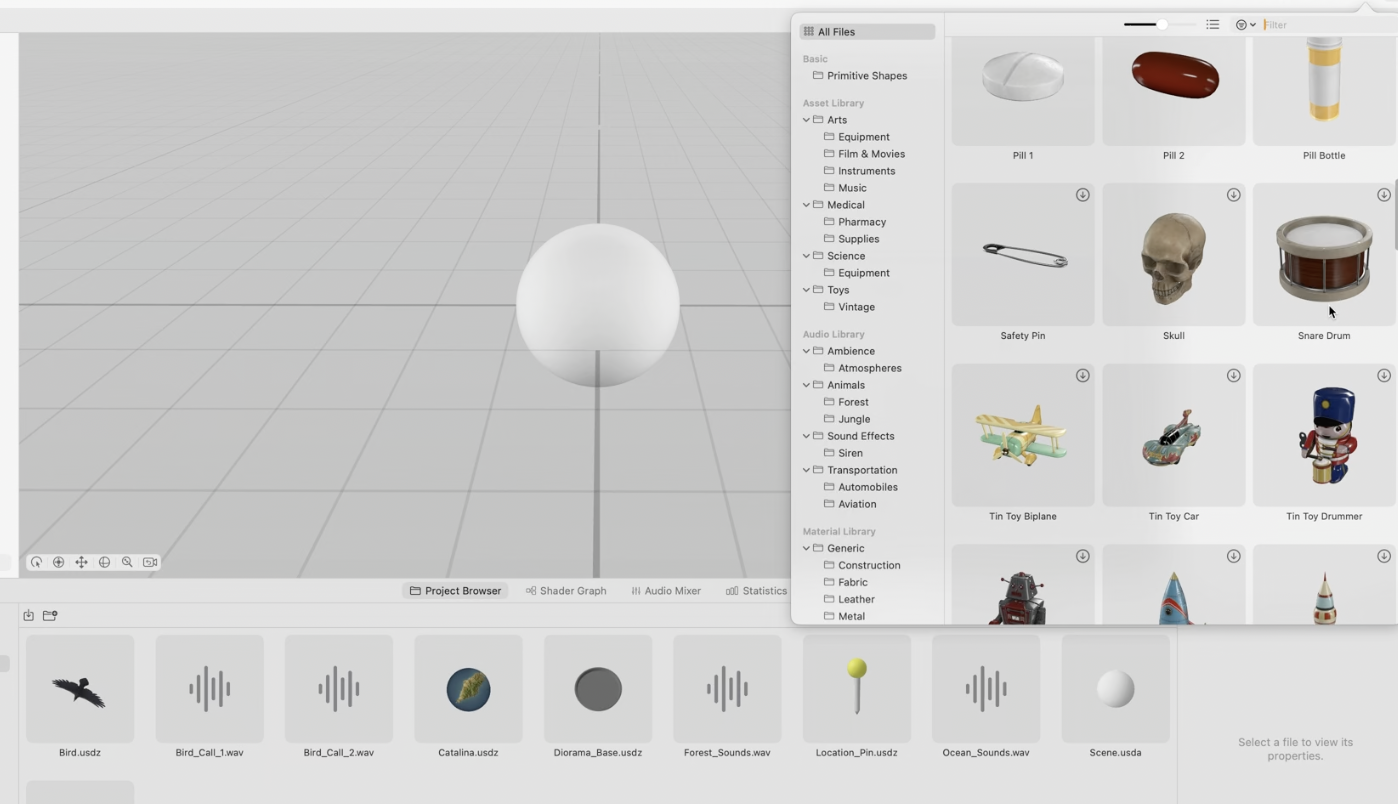

How To Use Reality Composer Pro

Reality Composer Pro is a tool used to create, edit and preview 3D content

Reality Composer Pro can be set up in two ways.

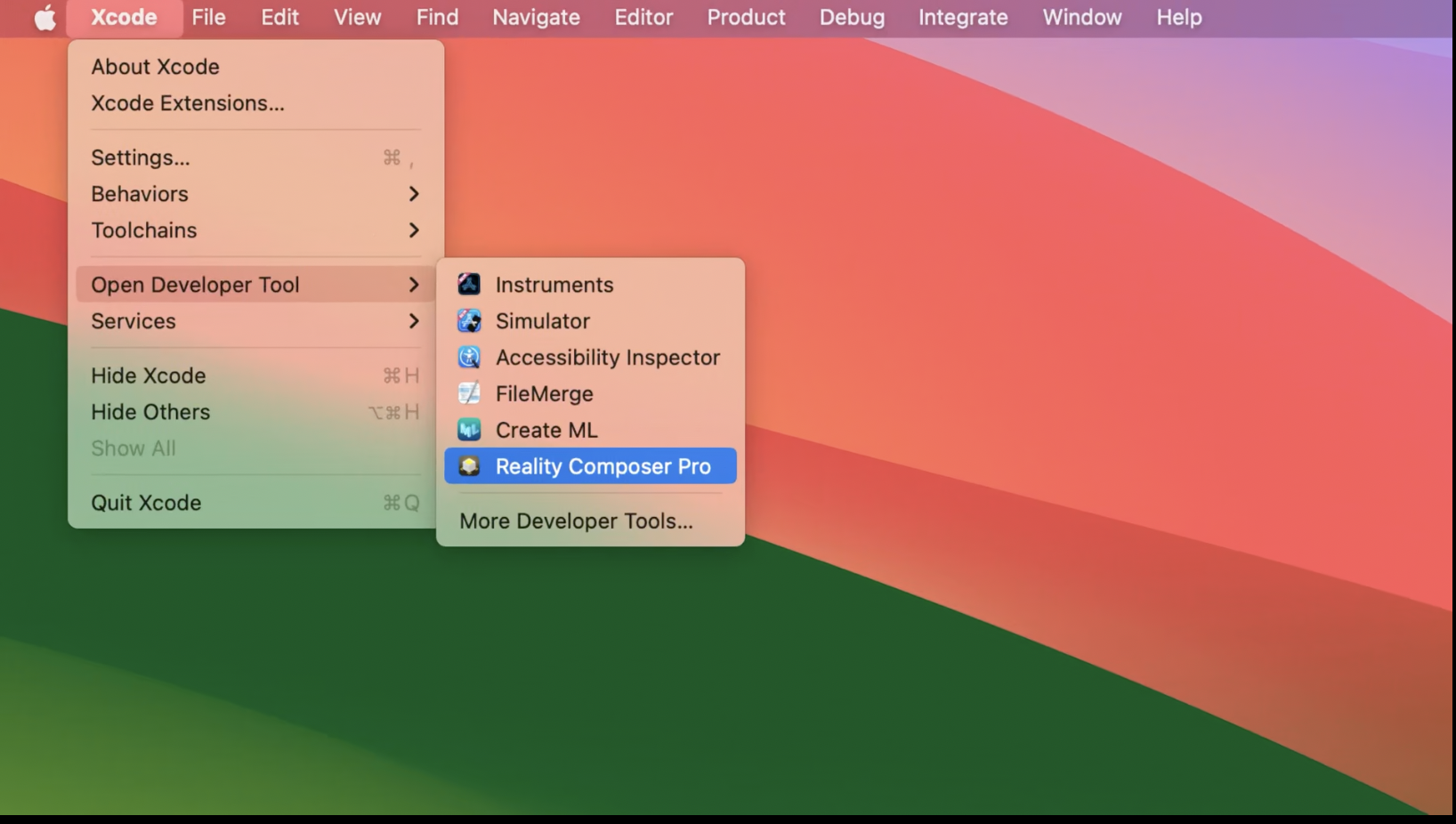

1. To open Reality Composer Pro as a standalone app, use the Xcode menu bar: choose Open Developer Tool, then Reality Composer Pro

2. To have a project integrated with Reality Composer Pro, create an Xcode project using a visionOS template. This creates a default Reality Composer Pro project; select the file and click the Open in Reality Composer Pro button

Reality Composer Pro’s UI has 5 main components

Left sidebar, called the hierarchy panel, used for navigation

Right sidebar, called the inspector panel, used to edit properties of 3D objects

Main view, called the viewport, used to view 3D objects

Add Component section, used to add RealityKit components

Bottom panel, called the editor panel, used as a project browser

Add assets to projects in 3 different ways

1. Drag and Drop content from project browser

2. Add content from content library

3. Use Object Capture: upload images of a real object, and Reality Composer Pro will generate a 3D model from them

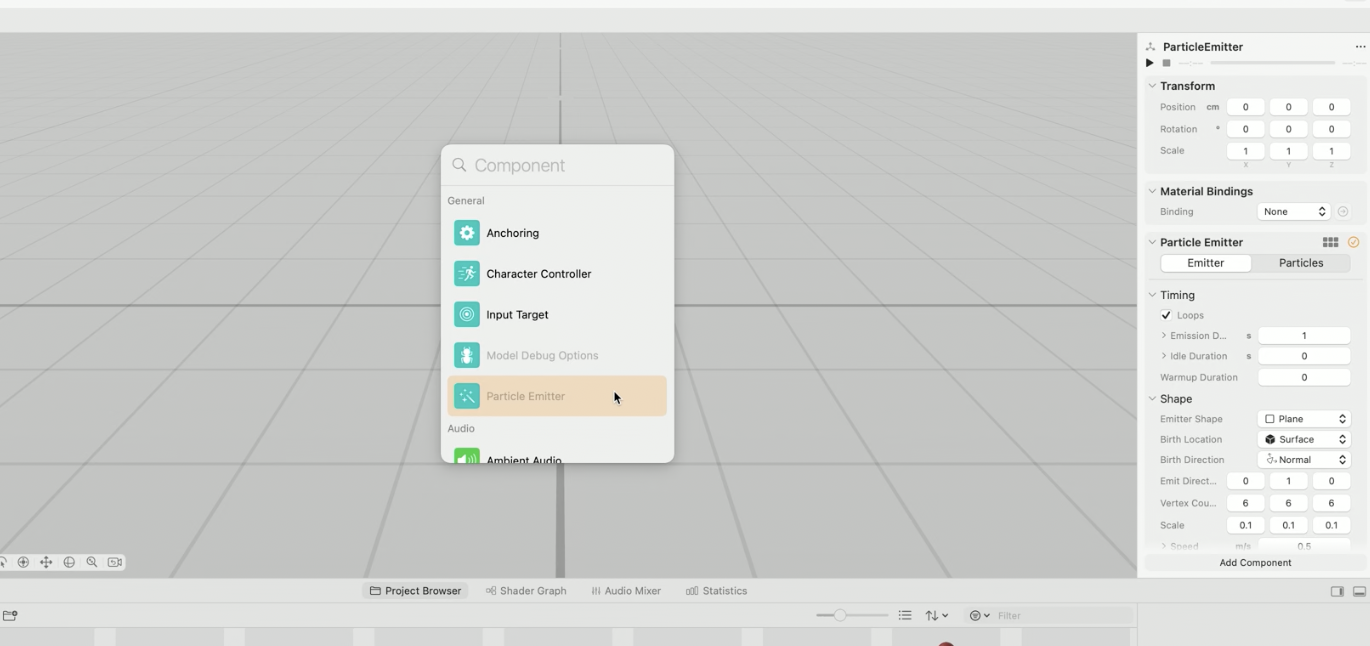

How to incorporate audio via Reality Composer Pro

Select a specific entity, then click the + (Add Component) button to add spatial, ambient, or channel audio

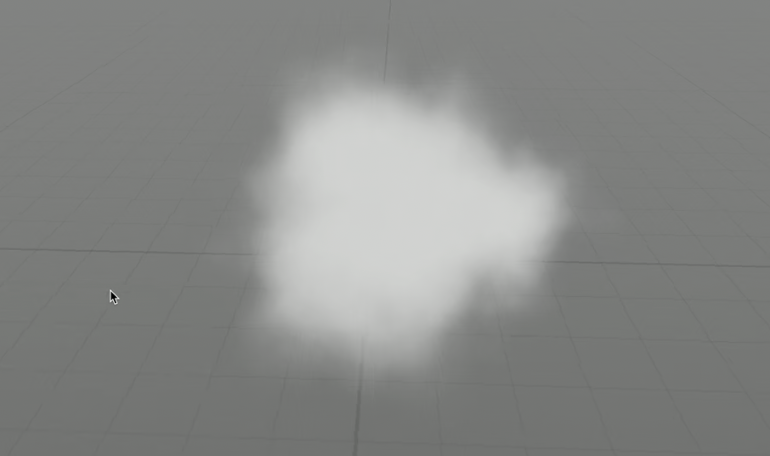

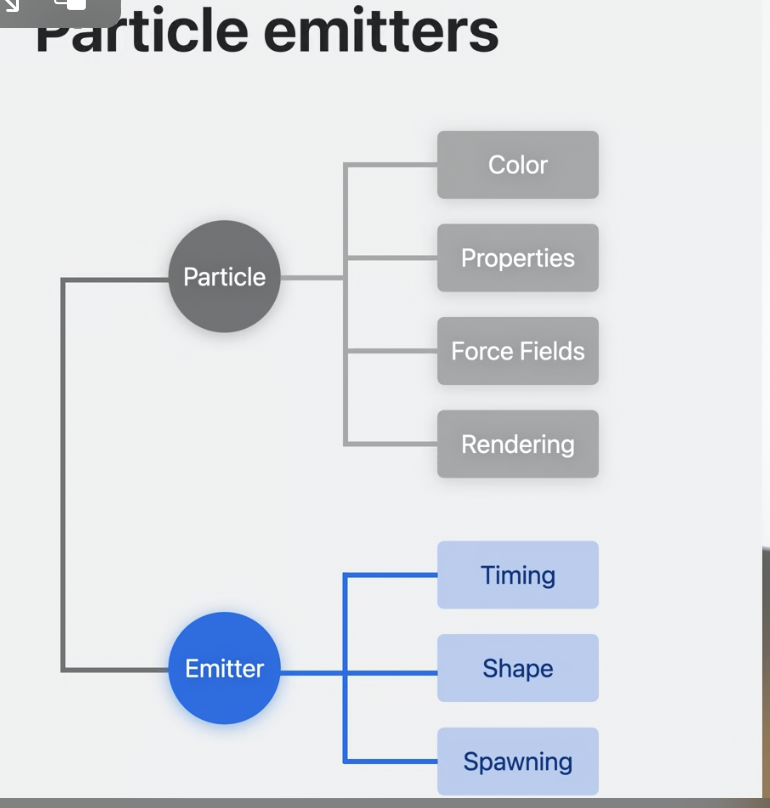

Add Particle Emitters via RealityKit

Particle emitters can be used to create 3D objects that have visual dynamic properties.

Example, a flickering flame or a hovering cloud

Create Particle emitters by clicking the (+) button in the add components section.

Use the inspector panel to edit the properties of a particle emitter to your liking
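Particle emitters can also be created in code. This sketch keeps the default emitter settings apart from a sphere shape and an illustrative birth rate; the inspector in Reality Composer Pro edits roughly the same ParticleEmitterComponent properties.

```swift
import RealityKit

// Builds an entity with a sphere-shaped particle emitter. Values are illustrative.
func makeSparkleEntity() -> Entity {
    var emitter = ParticleEmitterComponent()
    emitter.emitterShape = .sphere
    emitter.emitterShapeSize = [0.1, 0.1, 0.1]   // meters
    emitter.mainEmitter.birthRate = 200          // particles per second

    let entity = Entity()
    entity.components.set(emitter)
    return entity
}
```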

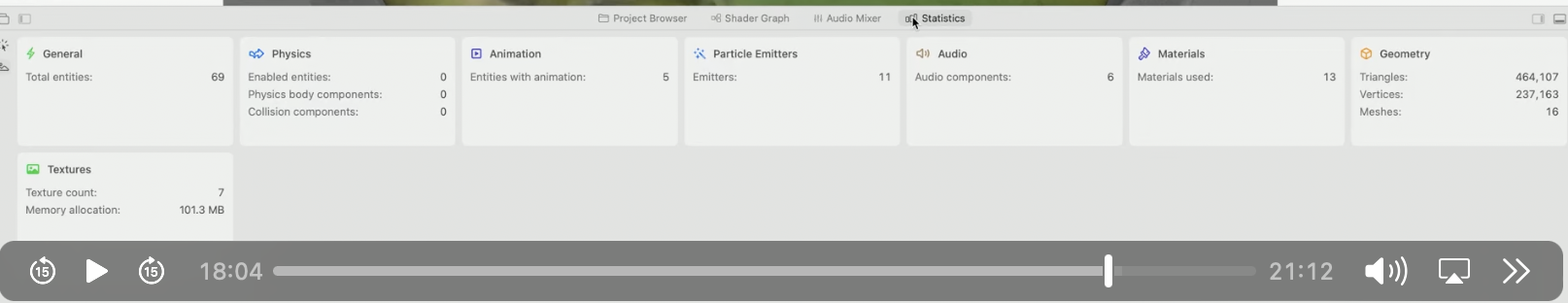

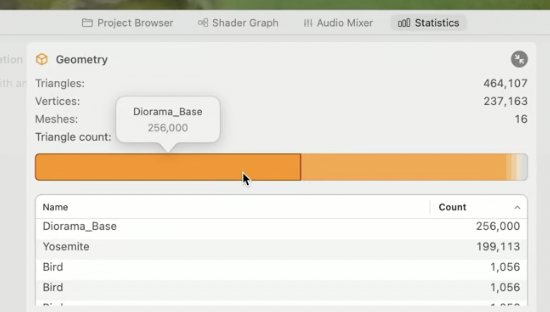

Using statistics to optimize reality composer scenes

Statistics inside Reality Composer Pro offer metrics for various categories of a Reality Composer Pro scene.

Use these statistics to identify areas of a scene that are bottlenecks for resource consumption.

Utilizing Safari Development Features

Use the developer features in Safari to make sure your app's web content is optimized

Use Web Inspector to inspect and edit HTML elements created by your application

Open Web Inspector by right-clicking and choosing Inspect Element

Use Responsive Design Mode to preview a web page in different layouts. If Xcode is installed, simulated previews on different iOS devices can be viewed as well
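For web content shown inside your own app, Web Inspector only attaches to a WKWebView that opts in. A minimal sketch, assuming an SDK where `isInspectable` exists (iOS 16.4 and later, including visionOS); the URL is a placeholder and the `#if DEBUG` guard is a design choice, not a requirement.

```swift
import WebKit

// Creates a web view that Safari's Web Inspector can attach to in debug builds.
@MainActor
func makeInspectableWebView() -> WKWebView {
    let webView = WKWebView(frame: .zero)
    #if DEBUG
    webView.isInspectable = true
    #endif
    webView.load(URLRequest(url: URL(string: "https://example.com")!))
    return webView
}
```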

Using Quick Look For Spatial Computing

What Is Quick Look?

Quick Look is a way to preview files safely in the Apple ecosystem. Quick Look is available in iOS, macOS, and now visionOS. On other platforms, Quick Look lets users preview pictures, videos, and other types of files without opening them in a full app. In visionOS, the same concept can be applied to 3D digital assets

How to Open Quick Look

Quick Look can be used in visionOS by pinching, grabbing, and dropping an image that represents a digital asset. Images that support Quick Look are denoted with the Quick Look cube logo.

Using Quick Look

Once a file is opened in Quick Look, a markup editor is available to add notes and details to the file

How to add a quick look link to an image

Add a Quick Look preview to a view by applying the .quickLookPreview modifier and providing a URL to the appropriate USDZ file
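A minimal SwiftUI sketch, assuming a hypothetical `toy_robot.usdz` file bundled with the app. Setting the bound URL presents the preview, and the binding returns to nil when the preview is dismissed.

```swift
import SwiftUI
import QuickLook

struct ModelPreviewButton: View {
    // Non-nil presents the Quick Look preview.
    @State private var previewURL: URL?

    var body: some View {
        Button("Preview model") {
            // "toy_robot.usdz" is a hypothetical bundled file.
            previewURL = Bundle.main.url(forResource: "toy_robot", withExtension: "usdz")
        }
        .quickLookPreview($previewURL)
    }
}
```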

How are 3D models presented in quicklook?

Like other 3D content in visionOS, 3D objects are presented in a volume

The rendered 3D model is placed in the center of the volume and automatically oriented toward you

A window bar is available for repositioning

The size of the 3D object is determined by the USDZ file

A ground plane and shadows are automatically added to 3D content

How To Create 3D models for quick look

3D models can be created by third-party digital content creation studios, by scanning objects with RealityKit's Object Capture, or, for rooms, with the RoomPlan API

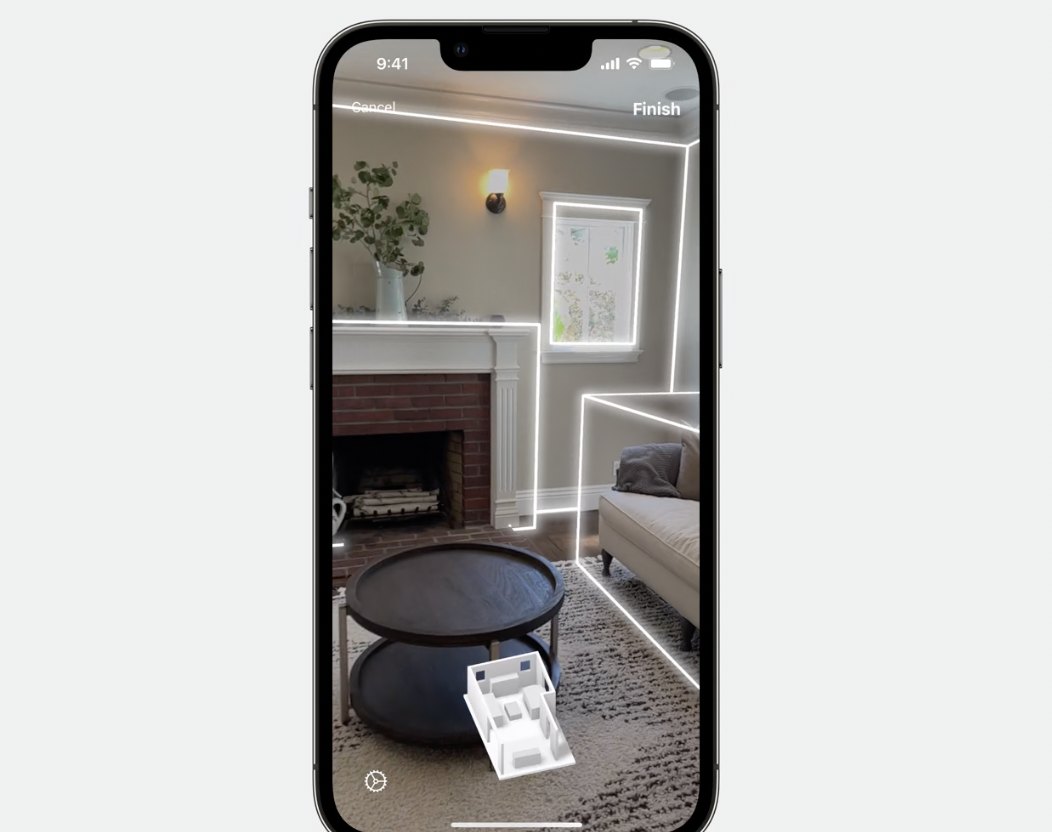

How to Use the RoomPlan API

RoomPlan is an Apple-provided API that lets you scan a room and generates a USDZ file containing a 3D model of the room

Understanding Reality Composer Pro

Reality Composer Pro is a tool you can use to design and edit 3D content meant to be deployed in visionOS

RCP can be launched directly through Xcode's developer tools or from a project linked in Xcode. When launched from a linked project, the RealityKit content appears as a Swift package

Tour of the Reality Composer Pro Software

Viewport

Displays 3D objects that are in the current scene

Hierarchy Panel

Panel on the left side of the studio app that shows the hierarchical layout of 3D objects in the current scene. Use it to sort and arrange the objects

Inspector Panel

Panel on the right side of the studio app that allows you to edit attributes of the selected object

Editor panel

Panel on the bottom of the studio app that shows all imported objects in current scene

Creating a Scene in Reality Composer Pro